DoltHub is the place to share Dolt’s version controlled databases. Last month, we created a migrate button that automatically migrates your Dolt databases to the latest storage format. We’ve since migrated all ~100 public databases on the DoltHub organization name! This is a milestone towards the release of the new format. In this blog, we’ll talk about why we set this goal and what problems it helped us find.

Why migrate the DoltHub organization?#

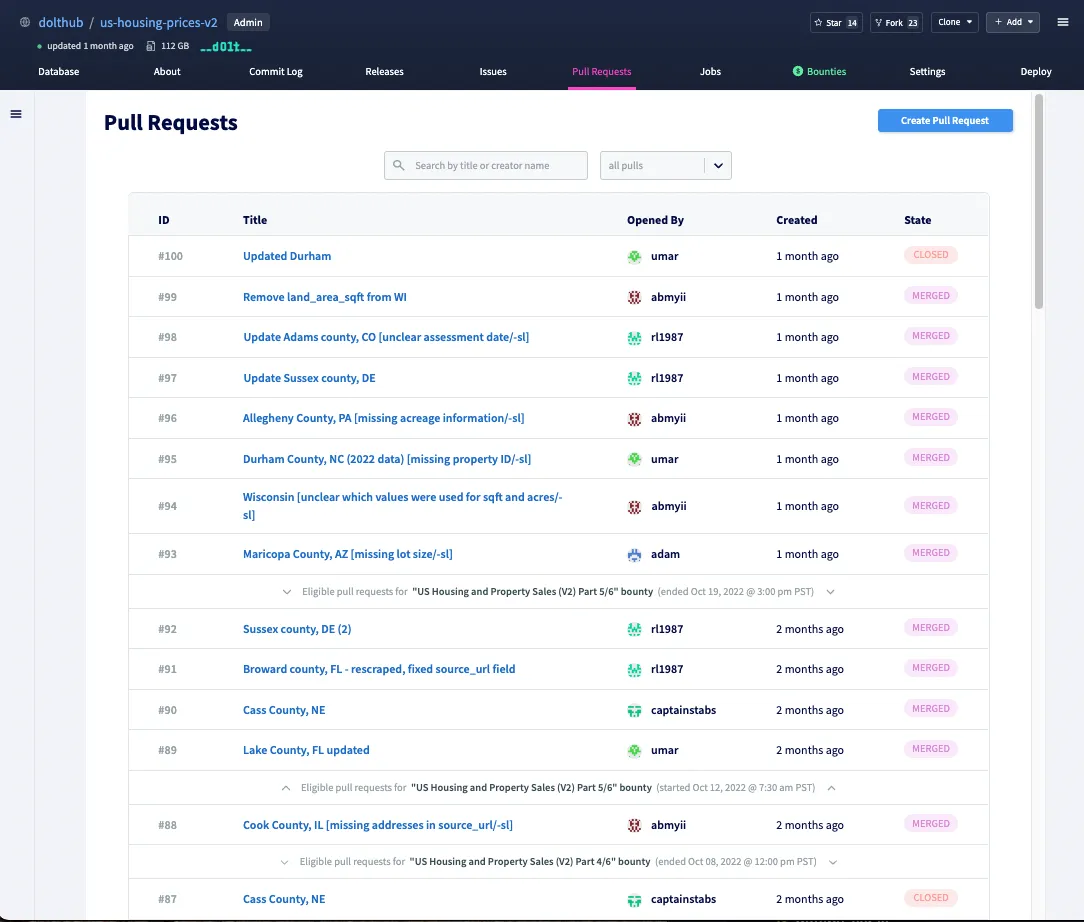

The DoltHub organization hosts some of the most complex databases on DoltHub which are perfect for testing out the migration. Just look at any of the databases created by a Data Bounty like dolthub/us-housing-prices-v2. They have plenty of commits and merges, host tables with realistic schema, and are fairly large.

In addition, the databases have been created with a wide array of Dolt versions. The team has shipped 200 different releases of Dolt since the first public release. Dolt has changed rapidly over the years and often functionality would change in subtle ways. Testing on databases that have been modified by different versions of the software makes for a realistic testing ground.

How did we do it#

The first step of migrating all of DoltHub’s databases was getting a list of them

and their corresponding formats. 7.18 and __LD_1__ are the old formats. __DOLT__ is the new format. Here’s the script we used to fetch the information:

# Accept an owner name as the first parameter to the script

owner=$1

# $token is the auth token

auth_cookie="dolthubToken=$token"

# Graphql query to fetch the database names

query=$(cat <<EOF

query GetDatabaseNames(\$ownerName: String!, \$pageToken: String) {

repos(ownerName: \$ownerName, pageToken: \$pageToken) {

list {

_id

}

nextPageToken

}

}

EOF

)

query=$(echo $query | tr -d '\n')

# Execute the graphql query and save the page token so that we can

# fetch all the pages.

do_query()

{

if [ "$nextPageToken" != "" ]

then

vars="{\"ownerName\": \"$owner\", \"pageToken\": \"$nextPageToken\"}"

else

vars="{\"ownerName\": \"$owner\"}"

fi

payload=$(cat <<EOF

{"query": "$query","variables": $vars}

EOF

)

resp=$(curl -s --location --request POST 'https://www.dolthub.com/graphql' \

-b $auth_cookie \

--header 'Content-Type: application/json' \

--data "$payload")

echo $resp | jq -r '.data.repos.list | .[] | ._id'

nextPageToken=$(echo $resp | jq -r '.data.repos.nextPageToken')

}

# Fetch the first page

do_query

# Fetch the rest of the pages

while [ "$nextPageToken" != "" ]

do

do_query

doneWe fed the list of database names into a similar script that fetches the storage format for each database. Here is some sample output:

> ./get_repo_names_for_owner.sh 'dolthub' | xargs -L 1 ./get_storage_format_for_repo.sh

dolthub/quest,__DOLT__

dolthub/census2020,__LD_1__

dolthub/units-of-measure,__DOLT__

dolthub/im-interested,__LD_1__

dolthub/stock-market,__DOLT__

dolthub/product-analytics,__DOLT__

dolthub/million-songs,__LD_1__

dolthub/neural-code-search-evaluation,__DOLT__

dolthub/country-codes,__LD_1__

dolthub/classified-iris-measurements,__LD_1__

....We loaded this into Google Sheets and used it to keep ourselves organized.

From there, part of the job was playing Cookie Clicker. We clicked the migrate button for many repos at once and monitored the jobs. Some databases would migrate fairly quickly and some would take hours. Some databases never completed at all.

You can’t win it all sometimes#

For a couple databases it was not worth the effort to migrate them. Some of these databases were over three years old and were made with a very early format of Dolt, 7.18. Others were victims of old format bugs that made the migrations fail in the validation phase. One database, dolthub/us-housing-prices, had duplicate entries for the same primary key. We wrote a custom tool to fix those duplicates when we migrated it for dolthub/us-housing-prices-v2.

For these databases, the best we could do is dump all the data at the tip of the history and import it into a new database. It’s unfortunate that this drops this history. The following script helped do that. You can use it by running it from the database’s directory. Please note that you will have to drop any foreign keys before exporting.

#!/bin/bash

# Double check that we are in the right directory

output=$(dolt status)

if ! [[ "$output" =~ "nothing to commit" ]]; then

echo "database missing or dirty, please commit all changes"

exit 1

fi

# Get the name of the current folder and make a new folder with -new suffixed.

db_name=$(basename $PWD)

new_name="$db_name-new"

tbl_names=$(dolt ls | tail -n +2 | xargs)

mkdir ../$new_name

cd ../$new_name

dolt init

cd ../$db_name

# For each table

for table_name in $tbl_names

do

# Get the create table statement for the table to ensure that the schema

# of the new table is the same.

dolt sql -r json -q "SHOW CREATE TABLE $table_name" | jq -r '.rows[0]."Create Table"' > $table_name.sql

# Export the table data to csv

echo "exporting $table_name"

dolt table export $table_name $table_name.csv

cd ../$new_name

# Create the new table

dolt sql < ../$db_name/$table_name.sql

# Import the data

echo "importing $table_name"

dolt table import -u -continue $table_name ../$db_name/$table_name.csv

cd ../$db_name

done

cd ../$new_name

# Create a commit

dolt commit -Am "Import data from existing database"Conclusion#

In the following weeks, you will hear more from the team at DoltHub on a deprecation schedule for the old format. We urge you to migrate your databases if possible. It’s time to get your new format Dolt!

Of course, please reach out to the team on Discord if you have any questions or if we can help you migrate your database.

Additional reading#

If you would like to learn more about the new storage format, you can read these blogs:

-

How to chunk your database into a Merkle Tree (June 27, 2022)

-

Don’t change your open-source database’s storage engine (July 20, 2022)

-

How we benchmark Dolt (August 3, 2022)

-

Migrating Dolt’s Binary Format (August 11, 2022)

-

Why we chose Flatbuffers (August 22, 2022)

-

New Storage Format Fundamentals & Benchmarks (September 30, 2022)

-

Migrate your database on DoltHub (November 1st, 2022)