We’re building Dolt, the world’s first SQL database with Git-like version control. Dolt has a large test suite. Part of it is Go unit tests, and part is written in bats, a framework for writing tests that run in Bash and that makes it easy to exercise interactions with standalone programs.

Testing some of Dolt’s features, like standby replication, requires spawning and coordinating multiple processes, and Bats and Bash weren’t the right tools for the job. Luckily, Go has great facilities for spawning and interacting with background processes. Running processes from Go is not as concise as running them from the shell, but Go offers robust error handling and concurrency.

This is a short synopsis of some useful patterns for working with the os/exec package, which has been more than sufficient for almost all of our needs so far.

Basic Usage

The basic usage is to construct an *exec.Cmd using exec.Command(), configure any fields on the Cmd struct which need customization, and then run the process with one of Run(), Output(), or CombinedOutput(), or by calling Start() followed by Wait(). A simple example is something like:

```go
package main

import "os/exec"

func main() {
	cmd := exec.Command("ls", "/usr/local/bin")
	err := cmd.Run()
	if err != nil {
		panic(err)
	}
}
```

If you just need to run the process and wait for it to complete, and you don’t need to interact with it in any way while it is running, use Run() or one of the Output() methods.
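When all you want is the process’s output, Output() runs the command and returns its stdout, while CombinedOutput() returns stdout and stderr interleaved. Below is a minimal sketch, assuming a Unix-like system with sh on the PATH; runAndReport is a hypothetical helper, not part of os/exec. It also shows how a non-zero exit surfaces as an *exec.ExitError, whose Stderr field Output() fills in when cmd.Stderr was left nil.

```go
package main

import (
	"errors"
	"fmt"
	"os/exec"
)

// runAndReport is a hypothetical helper: it runs a shell snippet with
// Output() and returns the captured stdout plus the exit code.
func runAndReport(script string) (string, int, error) {
	out, err := exec.Command("sh", "-c", script).Output()
	var exitErr *exec.ExitError
	if errors.As(err, &exitErr) {
		// The process ran but exited non-zero. Because cmd.Stderr was nil,
		// Output() saved what the process wrote to stderr in exitErr.Stderr.
		fmt.Printf("stderr: %s", exitErr.Stderr)
		return string(out), exitErr.ExitCode(), nil
	}
	if err != nil {
		// The process could not be started at all (e.g. binary not found).
		return "", -1, err
	}
	return string(out), 0, nil
}

func main() {
	out, code, err := runAndReport("echo hello; echo oops >&2; exit 3")
	if err != nil {
		panic(err)
	}
	fmt.Printf("stdout=%q exit=%d\n", out, code)
}
```

Distinguishing *exec.ExitError from other errors is useful in practice: the former means the process ran and reported failure, while anything else usually means it never started.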

Start() followed by Wait() is for when you need the option to interact with and monitor the running process. After Start() returns, the struct’s Process field is populated and can be used, for example, to send signals to the running process. Run() and the Output() methods populate the field too, but you can’t safely read it from another goroutine while the call is in progress, because there’s no way to form a happens-before relationship between the goroutine calling Run() and any concurrent goroutine that wants to read the Process field. For that reason, if you need to preemptively kill the process or read its Process state for any reason, you should use Start() and Wait() explicitly.
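To make the Start()/Wait() split concrete, here is a sketch that starts a long-running process, uses the populated Process field to kill it, and then reaps it with Wait(). It assumes a Unix-like system with a sleep binary; startThenKill is a hypothetical name.

```go
package main

import (
	"fmt"
	"os/exec"
	"time"
)

// startThenKill is a hypothetical helper: it starts a long-running
// process, lets it run briefly, then kills it and reaps it with Wait.
func startThenKill() (int, error) {
	cmd := exec.Command("sleep", "60")
	if err := cmd.Start(); err != nil {
		return 0, err
	}
	// Once Start has returned, cmd.Process is populated and safe to use.
	fmt.Println("started pid", cmd.Process.Pid)

	time.Sleep(100 * time.Millisecond)
	if err := cmd.Process.Kill(); err != nil {
		cmd.Wait() // still reap the process before giving up
		return 0, err
	}
	// Wait reaps the process and fills in cmd.ProcessState. Because the
	// process was killed, Wait returns a non-nil *exec.ExitError.
	waitErr := cmd.Wait()
	// ExitCode reports -1 when the process was terminated by a signal.
	return cmd.ProcessState.ExitCode(), waitErr
}

func main() {
	code, err := startThenKill()
	fmt.Println("exit code:", code, "wait error:", err)
}
```

Always pair Start() with Wait(), even after Kill(): Wait() is what releases the process’s resources and records its final state.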

Sharing Standard Output

If you run the above program, you’ll notice that it exits 0 without producing any output. By default, os/exec runs the process with stdin, stdout and stderr all connected to the null device (/dev/null on Unix-like systems). By setting the Stdin, Stdout and Stderr fields on the Cmd struct before you start the process, you can redirect these to files or to any standard Go io.Reader or io.Writer.
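As a sketch of the io.Reader/io.Writer case, the snippet below feeds a string to tr on stdin and collects its stdout in a bytes.Buffer. It assumes tr is installed, as it is on most Unix-like systems; upcase is a hypothetical helper name.

```go
package main

import (
	"bytes"
	"fmt"
	"os/exec"
	"strings"
)

// upcase is a hypothetical helper that pipes input through `tr a-z A-Z`.
func upcase(input string) (string, error) {
	var out bytes.Buffer
	cmd := exec.Command("tr", "a-z", "A-Z")
	// Any io.Reader works as stdin. Since strings.Reader is not an
	// *os.File, os/exec creates a pipe and copies the data in a goroutine.
	cmd.Stdin = strings.NewReader(input)
	// Likewise, any io.Writer can receive stdout.
	cmd.Stdout = &out
	if err := cmd.Run(); err != nil {
		return "", err
	}
	return out.String(), nil
}

func main() {
	s, err := upcase("hello, os/exec\n")
	if err != nil {
		panic(err)
	}
	fmt.Print(s) // HELLO, OS/EXEC
}
```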

One potentially useful redirect is to let the new process inherit the spawning process’s stdout and stderr. This is easy to accomplish:

```go
cmd := exec.Command("ls", "/usr/local/bin")
cmd.Stdout = os.Stdout
cmd.Stderr = os.Stderr
return cmd.Run()
```

This is often appropriate if the spawning process is simply a wrapper around running another set of commands or a pipeline. Because os.Stdout and os.Stderr are *os.File values, the child is connected directly to those file descriptors, with no copying goroutine in between.
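A variation on this: if you want the child’s output to appear live and also keep a copy for later inspection, io.MultiWriter can stand in for os.Stdout. This is a minimal sketch; runTee is a hypothetical helper. The trade-off is that os/exec now copies output through a pipe and a goroutine instead of handing the child the file descriptor directly.

```go
package main

import (
	"bytes"
	"fmt"
	"io"
	"os"
	"os/exec"
)

// runTee is a hypothetical helper that streams the child's stdout to the
// parent's stdout while also capturing it in memory.
func runTee(name string, args ...string) (string, error) {
	var captured bytes.Buffer
	cmd := exec.Command(name, args...)
	// io.MultiWriter is not an *os.File, so os/exec reads the child's
	// output over a pipe and writes each chunk to both destinations.
	cmd.Stdout = io.MultiWriter(os.Stdout, &captured)
	cmd.Stderr = os.Stderr
	err := cmd.Run()
	return captured.String(), err
}

func main() {
	out, err := runTee("echo", "hello from the child")
	if err != nil {
		panic(err)
	}
	fmt.Printf("captured %d bytes\n", len(out))
}
```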

Capturing Output

Another common pattern is to capture the output to a file or to a buffer in the spawning process. To capture a combined log of stdout and stderr, for example, you simply need to set both fields to a file opened for writing, which you can get from os.Create() or os.OpenFile() (os.Open() opens files read-only):

```go
log, err := os.Create("output.log")
if err != nil {
	return err
}
defer log.Close()

cmd := exec.Command("ls", "/usr/local/bin")
cmd.Stdout = log
cmd.Stderr = log
return cmd.Run()
```

This is equivalent to something like:

```shell
$ ls /usr/local/bin >output.log 2>&1
```

on the shell.
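Stdout and Stderr don’t have to share a destination. The sketch below, assuming sh is available and using a hypothetical captureSeparately helper, collects each stream in its own bytes.Buffer, roughly the in-memory analogue of `>out.log 2>err.log`. If you do point both fields at the same writer, the os/exec documentation says that, for writer types comparable with ==, at most one goroutine at a time calls Write on it.

```go
package main

import (
	"bytes"
	"fmt"
	"os/exec"
)

// captureSeparately is a hypothetical helper that runs a shell snippet and
// returns its stdout and stderr as separate strings.
func captureSeparately(script string) (stdout, stderr string, err error) {
	var outBuf, errBuf bytes.Buffer
	cmd := exec.Command("sh", "-c", script)
	cmd.Stdout = &outBuf // stdout and stderr each get their own buffer
	cmd.Stderr = &errBuf
	err = cmd.Run()
	return outBuf.String(), errBuf.String(), err
}

func main() {
	out, errOut, err := captureSeparately("echo to-stdout; echo to-stderr >&2")
	fmt.Printf("stdout=%q stderr=%q err=%v\n", out, errOut, err)
}
```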

You can also easily capture the output to a buffer, which you can process from Go after the process has run:

```go
buf := new(bytes.Buffer)
cmd := exec.Command("ls", "/usr/local/bin")
cmd.Stdout = buf
err := cmd.Run()
if err != nil {
	return err
}
ProcessBuffer(buf)
```

One caveat is that if the spawned command generates a lot of output, the Buffer can grow without bound until the spawning process runs out of memory. You can process the output incrementally by using *Cmd.StdoutPipe() instead. You must call StdoutPipe() before you start the process, and it returns an io.ReadCloser which you can read from incrementally. Once the spawned process exits and closes its end of the pipe, reads return io.EOF. Wait() closes the pipe after the process exits, so only call it once all reads have completed; for the same reason, use Start() and Wait() rather than Run() with StdoutPipe(). As an example:

cmd := exec.Command("ls", "/usr/local/bin")

stdout, err := cmd.StdoutPipe()

if err != nil {

return err

}

scanner := bufio.NewScanner(stdout)

err = cmd.Start()

if err != nil {

return err

}

for scanner.Scan() {

// Do something with the line here.

ProcessLine(scanner.Text())

}

if scanner.Err() != nil {

cmd.Process.Kill()

cmd.Wait()

return scanner.Err()

}

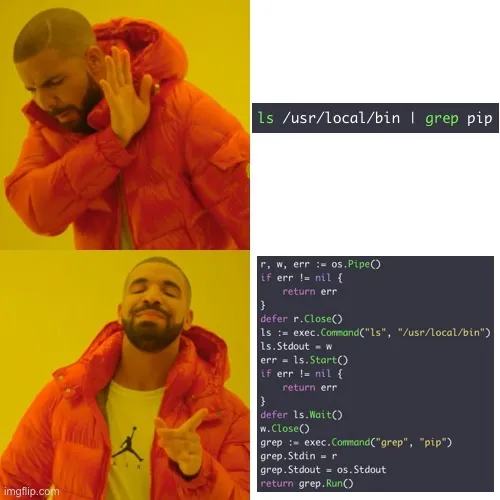

return cmd.Wait()Piping Between Processes#

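On Unix, it’s common to have small utility programs which are connected by pipes. This is quite easy to accomplish with os/exec and os.Pipe(). Some care should be taken with the lifecycle of the pipe files so that the processes naturally run to completion. Once a process has started, it holds its own copies of the pipe file descriptors it inherited, so the parent should close its copies. In particular, the write side of the pipe should be closed in the parent after the writer is started; otherwise the reader never sees EOF. Likewise, closing the read side after the reader is started lets the writer receive SIGPIPE, rather than blocking on a full pipe, if the reader exits early.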

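```go
r, w, err := os.Pipe()
if err != nil {
	return err
}

ls := exec.Command("ls", "/usr/local/bin")
ls.Stdout = w
err = ls.Start()
// ls now holds its own copy of the write end. Close ours so that grep
// sees EOF once ls exits.
w.Close()
if err != nil {
	r.Close()
	return err
}
defer ls.Wait()

grep := exec.Command("grep", "pip")
grep.Stdin = r
grep.Stdout = os.Stdout
err = grep.Start()
// grep now holds its own copy of the read end. Close ours so that ls gets
// SIGPIPE, rather than blocking forever, if grep exits early.
r.Close()
if err != nil {
	return err
}
return grep.Wait()
```

This is equivalent to something like:

```shell
$ ls /usr/local/bin | grep pip
```

on the shell.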

errgroup and CommandContext

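With CommandContext, os/exec makes it easy to tie the lifecycle of a spawned process to a Context. When the Context is done (its Done() channel is closed), the process is killed with a KILL signal if it is still running. Since Go 1.20, the Cmd.Cancel and Cmd.WaitDelay fields let you customize this behavior.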

This can be combined with errgroup (golang.org/x/sync/errgroup) to run a collection of processes together and to terminate the whole collection if any of them fails, for example by exiting non-zero. As a silly example:

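```go
eg, ctx := errgroup.WithContext(context.Background())

sleeps := make([]*exec.Cmd, 3)
sleeps[0] = exec.CommandContext(ctx, "sleep", "100")
sleeps[1] = exec.CommandContext(ctx, "sleep", "100")
// This one fails right away, which cancels ctx and kills the other two.
sleeps[2] = exec.CommandContext(ctx, "sleep", "notanumber")

for _, s := range sleeps {
	s := s // capture the loop variable (unnecessary as of Go 1.22)
	eg.Go(func() error {
		return s.Run()
	})
}

// Wait returns the first non-nil error returned by any of the goroutines.
return eg.Wait()
```

Process Groups and Graceful Shutdown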

By default on Unix-like operating systems, spawned processes inherit the process group of the Go binary which is calling *Cmd.Start(). The terminal delivers keyboard-generated signals, such as SIGINT from Ctrl-C, to every process in the foreground process group, so the spawned processes receive them alongside the Go binary. If you want to shut down the spawned processes in a particular order or with particular signals, then this may not be what you want. To detach the spawned processes from the spawning process’s process group, you can set Setpgid: true within a *syscall.SysProcAttr assigned to the Cmd.SysProcAttr field before you start the process. Here is a full example, where we want a proxy process to start before a server process, and we want the server process to fully terminate before we stop the proxy process.

```go
sigch := make(chan os.Signal, 1)
signal.Notify(sigch, os.Interrupt, syscall.SIGTERM)
defer signal.Stop(sigch)

proxy := exec.Command("bash", "-c", `
	trap "echo proxy exiting" EXIT
	echo "proxy started"
	sleep 100
`)
proxy.Stdout = os.Stdout
// Give the proxy its own process group so Ctrl-C in the terminal is not
// delivered to it directly.
proxy.SysProcAttr = &syscall.SysProcAttr{
	Setpgid: true,
}

server := exec.Command("bash", "-c", `
	trap "echo server exiting" EXIT
	echo server started
	sleep 100
`)
server.Stdout = os.Stdout
server.SysProcAttr = &syscall.SysProcAttr{
	Setpgid: true,
}

err := proxy.Start()
if err != nil {
	return err
}
// However we return below, make sure the proxy gets reaped.
defer proxy.Wait()

err = server.Start()
if err != nil {
	proxy.Process.Kill()
	return err
}

// Wait for the server in the background so we can also watch for signals.
serverDone := make(chan error, 1)
go func() {
	serverDone <- server.Wait()
}()

select {
case <-sigch:
	// Stop the server first and wait for it to exit completely...
	server.Process.Signal(syscall.SIGTERM)
	<-serverDone
case err = <-serverDone:
}
// ...and only then stop the proxy.
proxy.Process.Signal(syscall.SIGTERM)
return err
```

When you run this example, both spawned processes sleep for 100 seconds and then exit gracefully. If you send it an interrupt from the terminal using Ctrl-C, it will always print "server exiting" before it prints "proxy exiting": the process groups have been detached, so only the Go program receives the interrupt, and the code is constructed to wait for the server to stop before it stops the proxy.

Note that this example will only compile on Unix-like operating systems, as it

depends on system-specific exports from syscall, such as the SysProcAttr

struct’s Setpgid field.

Closing Thoughts

Hopefully the above cookbook of useful and common os/exec patterns comes in handy if you need to spawn processes and interact with them from a Go program. As with much of the Go standard library, we’ve generally found the interface well thought out, well documented, and well implemented. By converting our bats tests that covered sql-server functionality so that the processes are managed from within Go, we’ve reduced their runtime and improved both their robustness and their ability to provide useful diagnostics when things fail.

We also use the above patterns for utility programs throughout our code base, especially for things like developer tools or service-level integration test runners, where a number of dependencies need to reliably come up and have their lifecycles managed and monitored across the test run. While Go isn’t the most concise language for implementing things like pipelines and process groups, we find the functionality available in the standard library, and our ability to implement robust output and error handling from within Go, quite compelling.