DoltHub SQL Assistant Technical Details

Last week, we announced the DoltHub SQL Assistant, an LLM-powered SQL assistant that helps you write SQL queries on DoltHub. In today's post, we'll dive into the technical details of how DoltHub's SQL Assistant works behind the scenes.

The diagram above shows the basic deployment architecture for DoltHub's API and includes the changes we've made to power the new SQL Assistant feature.

The large blue square in the center represents a Kubernetes Node, or host, that runs DoltHub's main API. We run DoltHub on AWS's managed Kubernetes service, EKS, and each of these API nodes receives multiple DoltHub API Deployments for serving requests from DoltHub.com.

In addition to the DoltHub API Deployment on these nodes are a secondary set of deployments we call Asyncprocessors. These will be explained in more detail later on, when their role in the SQL Assistant's functionality and their relationship with Amazon's SQS service are discussed.

On the right side of the diagram is DoltHub's application database. This database is a Hosted Dolt instance, used for storing DoltHub.com's application data. Both DoltHub API and the Asyncprocessors read and write to this database. This runs on a separate host, outside of the DoltHub Kubernetes cluster, as it is instead provisioned and managed by Hosted Dolt.

Finally, on the left side of the diagram are the Ollama server deployments. These deployments are shown atop red squares. These squares represent separate Kubernetes nodes that have GPUs attached to them. Each Ollama deployment gets its own dedicated node. Notice too that the Ollama deployments are currently running deepseek-r1 models, which are a family of LLM models developed by DeepSeek.

Why did we choose deepseek-r1? Let's take a closer look at how we decided on this particular model and deployment strategy.

Choosing the deployment strategy and model

The SQL Assistant feature on DoltHub is the first of who knows how many LLM-powered features we'll be adding, and each feature will likely require its own unique model/set of models to perform its task. For this reason, we decided to deploy Ollama servers that can run multiple open-source models out-of-the-box, as long as the model manifests are available on the Ollama server. It can also handle concurrent requests to different models.

For our deployment, we came up with a cheeky, pull-first Ollama server Dockerfile that I wrote about recently. This allows us to pull our desired model(s) before the Ollama server is ready to serve traffic. For the SQL Assistant feature, our Ollama servers pull and run the deepseek-r1:latest model.

When choosing the right model for the SQL Assistant feature, we wanted a single, stateless model that could handle a one-shot prompt and output a SQL query. Our criteria for this model were:

- It must be open-source

- Here it's important to note we want to be able to run the model ourselves because:

- We will potentially be sending private database schema information in the prompt.

- We want to learn how to deploy and operate LLMs generally.

- We prefer open-source because we are an open-source company.

- The previous three requirements trump a cost-per-query implementation, although we have discussed using a cost-per-query option for public databases in the future.

- Here it's important to note we want to be able to run the model ourselves because:

- It must be able to write MySQL-compatible SQL.

- It must be able to translate natural language to valid, relevant SQL.

- It must be able to translate other flavors of SQL to the MySQL dialect.

- It must be able to read MySQL database schemas and generate a query against that schema based on the user's request.

- It must be able to return results in a structured JSON format.

Now, there are a number of models out in the ether which claim to meet these criteria, and even have published benchmarks to attempt to prove their claims. However, most of these models suck, actually. I tested a few of them on my local machine, just to see how they fared, and the only model I found remotely useful was the deepseek-r1 model.

I even ran llama3.1, Facebook's flagship LLM, and it was not good. It kept returning python scripts for generating SQL queries, instead of generating the queries themselves.

Now, I have much more to say about my experience so far experimenting with various models, but I will save that for next week's post. Just know that of the models I could actually run locally, deepseek-r1 was the best, so we went with that for SQL Assistant v1.

The SQL Assistant request lifecycle

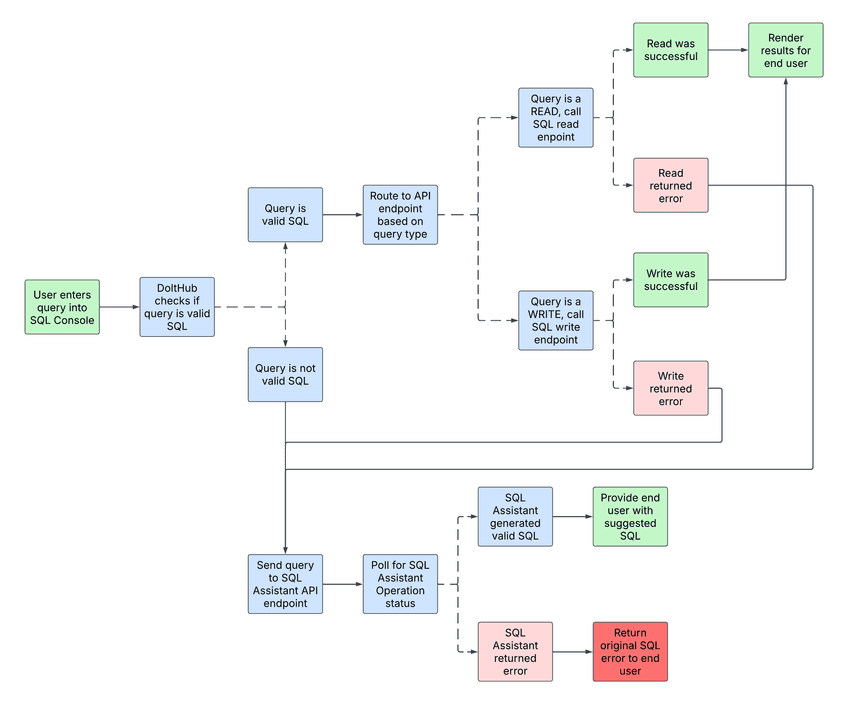

Once our infrastructure was updated with Ollama deployments, we were able to implement the SQL Assistant feature. I think it's most helpful to understand the request lifecycle of the SQL Assistant feature to understand how it works under-the-hood. To aid in presenting this, I've created the above diagram that shows the business logic flow of the SQL Assistant feature. The diagram starts on the left side with the user running a query in the SQL Console. On DoltHub, when a user runs a query in the SQL Console, the request is routed to either a SQL read query API endpoint or a SQL write query API endpoint, depending on the type of query the user runs. If the query was valid, and did not result in an error, the response is returned to the user and the SQL Assistant feature is not involved.

However, if the query is invalid, is a natural language query, or has resulted in an error, the query is routed to the SQL Assistant API endpoint instead. This endpoint is part of DoltHub's main API. Once DoltHub API receives this request, it does not immediately process the query by calling the LLM, but instead, it creates an asynchronous unit of work that it enqueues to Amazon's SQS service.

Let's break this down a bit more. The DoltHub API -> AWS SQS -> Asyncprocessor machinery used in DoltHub.com is actually not new. We use this asynchronous machinery whenever we need to do work in the API that takes longer than a few seconds to complete.

Instead of processing a request in real-time, DoltHub API creates a SQS message that contains all the information it needs for a different process, the Asyncprocessor deployment, to pick it up and complete the work.

work := func(ctx context.Context) (*domain.WorkResult, error) {

unitOfWork, err := s.NewUOW(ctx)

if err != nil {

return nil, err

}

defer unitOfWork.Close()

resp, err := unitOfWork.Chat().AnswerQuestion(ctx, repoDataUseCase, sqlQueryType, ownerName, repoName, refStr, req.Message)

if err != nil {

return nil, err

}

res, err := anypb.New(

&dolthubapi.ChatResponse{

Message: resp.Response,

})

if err != nil {

return nil, err

}

return &domain.WorkResult{Resp: res}, nil

}

o, err := createOperationAndCommitUOW(ctx, unitOfWork, callingUserID, req)

if err != nil {

return nil, err

}

if err = domain.RunOperation(ctx, OperationTxFactory(s.UnitOfWorkProvider), o, nil, work); err != nil {

return nil, err

}The snippet above is a simplified version of the code that DoltHub API uses to create a unit of work and enqueue a message to SQS. The work function is what will be written to the SQS queue and processed by the Asyncprocessor later.

This is where the actual call to the LLM is made, in the method unitOfWork.Chat().AnswerQuestion(). It was crucial that the call to the LLM be asynchronous, since the model can take an arbitrary amount of time to generate a response, and we don't want to block the main API while it's reasoning out a response.

During the call to AnswerQuestion(), the LLM is called via an HTTP request to the Ollama server, deployed alongside DoltHub API.

In this HTTP request, the LLM is given a prompt that contains the user's query and the DoltHub database schema for the particular branch that the user is querying. The prompt also contains instructions for how the LLM should respond to the prompt, so that the response can be parsed as JSON and returned to the user. In the current iteration of the SQL Assistant, the model's reasoning output is discarded, and only the JSON response is collected. It is also checked to ensure that the final message to the user contains a valid SQL query. Any other response is discarded as well.

Getting back to the work function, it is important to note that it is tracked by an Operation identifier which is persisted to the application database. This operation corresponds to the SQS message that is written to the queue. You can see the creation of the Operation with the call of createOperationAndCommitUOW() in the snippet above. The call to domain.RunOperation() is what actually writes the message to the SQS queue.

Then, in a separate process, the Asyncprocessor, which is actually just a DoltHub API deployment running in a different mode (asyncprocessor mode), picks up the message from the SQS queue and executes the work function. There is no time-limit on the work function, so it can take as long as it needs to to run. In this case, it will run for as long as it takes the LLM to reason about the user's query and generate a valid response. Once the work function completes, the Asyncprocessor writes the results of the work function to the application database.

At this point, you might be wondering how the end user, who wrote the original query, gets the final response from the LLM, since it all happens via this asynchronous, long-running machinery. Well, when DoltHub API received the initial request at the SQL Assistant API endpoint, it returned a response that includes the Operation identifier that corresponds to the work function that was enqueued to the SQS queue.

Then, on the frontend, DoltHub.com polls the API endpoint for the Operation's status using the Operation identifier it received from the SQL Assistant API endpoint. This way, when the Operation completes, the frontend can call the GetOperation API endpoint to get the resulting response that came from the LLM.

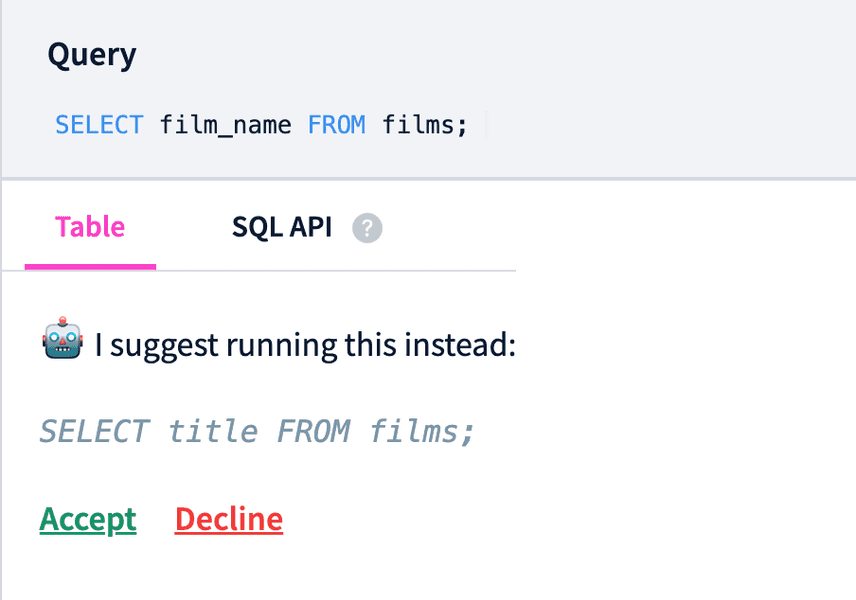

This final response is presented to the end user as the SQL Assistant's suggestion, as seen in the screenshot below.

Conclusion

If you haven't given the SQL Assistant a try yet, you should check it out. It is... not great in its current iteration. It is prone to hallucinations and spelling problems, but we're actively working on ways to improve it. We really think this particular use-case is one of the most compelling for LLM's today.

As always, we'd love to hear from you. If you have any suggestions or feedback, or just want to share your own experiences, please come by our Discord and give us a shout.

Don't forget to check out each of our cool products below:

- Dolt—it's Git for data.

- Doltgres—it's Dolt + PostgreSQL.

- DoltHub—it's GitHub for data.

- DoltLab—it's GitLab for data.

- Hosted Dolt—it's RDS for Dolt databases.

- Dolt Workbench—it's a SQL workbench for Dolt databases.