Things are going pretty well here at DoltHub. Dolt, the world’s first and only version controlled SQL database, is being used for a ton of use cases, the most popular of which are to version video game configuration or as a Machine Learning Feature Store.

But we’re not resting on our laurels. We’re always looking for new and exciting ways Dolt’s unique version control features can improve existing workflows or enable new ones. An emerging area that caught our eye is agentic workflows. We think Dolt can really help with this use case. This article explains how.

Generative Artificial Intelligence

The big software innovation of the last few years is generative artificial intelligence. With the release of OpenAI’s ChatGPT, built on GPT-3.5, in November 2022, computers were able to generate novel, realistic text from an input prompt using large language models (LLMs). The generated text was indistinguishable from what a human could produce, at least at first glance, across a number of domains. Image, audio, and video generation followed using the same fundamental transformer building blocks. Generative artificial intelligence (AI) is now the hottest thing in tech.

So, what are people using generative AI to do in real life? The first use case is a better, more conversational search, replacing Google’s list of blue links as a way to answer questions. Instead of typing your search query into Google, you type a similar query into a chat interface and the generative AI tailors a custom response to your question. If you have follow-up questions, you have a conversation with the “Chat Bot”, often leading to an easier, more in-depth learning experience than a traditional internet search. This alone is a big deal as Google Search has long been the most profitable and defensible product in tech.

But, as it turns out, generative AI has many other use cases. Many real world problems fall into the “generate novel, realistic X from an input prompt” category. Software engineers generate working code and tests from a short template or a natural language prompt. Self-driving cars like Waymo and Tesla use generative AI to predict the next moves of the cars and pedestrians around the vehicle and react accordingly. Generative AI can translate videos into multiple languages with realistic facial movements tied to the new language.

Beyond the hype, generative AI is a truly useful, groundbreaking technology. The use cases listed above are salient because they are consumer-facing. Behind the scenes at companies, the use case getting a lot of interest is agentic workflows.

What are Agentic Workflows?

Agentic workflows already exist; we just don’t call them that. Until very recently, humans were the agents, so we called it issue tracking.

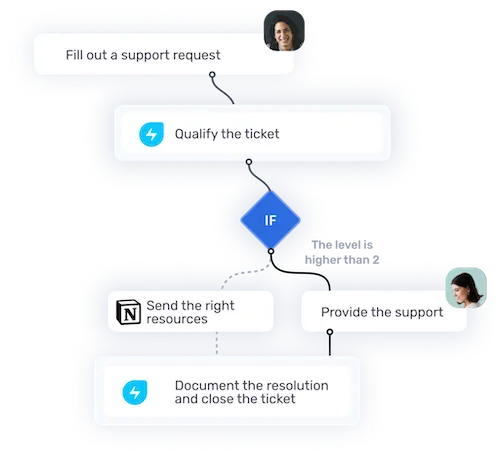

If you’ve ever emailed or called customer service, you were probably the customer of a potentially agentic workflow. At most companies, when you email customer support, a ticket is opened and customer service agents work your case through an issue tracking system. The case often involves a bit of back and forth with the customer. The agent often needs to ask some clarifying questions or confirm a resolution path. The issue tracking system manages this workflow, prompting agents on what work to do next: start working a new case or reply to an existing one.

Issue tracking systems aren’t just for customer service. If you work at a large company, especially at a desk job, it won’t surprise you that issue tracking systems are everywhere. You probably use one nearly every day. Engineers use issue tracking systems like GitHub Issues or JIRA for software bugs or projects. Security monitoring makes issues for abnormal access patterns or traffic. The “issue to case to work to resolution” pattern is a very common way to track and manage work at companies. This pattern can be leveraged to create agentic workflows.

Enter Generative AI

How does generative AI come in? The idea with agentic workflows is that some of the work currently being done by human agents in issue tracking systems can be done by generative AI agents. Agentic, in this case, is used specifically to refer to an AI agent, not a human agent. Generative AI agents are much cheaper than humans so even if an AI can do 5% of the work of a human, companies can save a lot of money.

Let’s go back to our familiar customer service example. Let’s say you ordered a new computer with a sick GPU to train some AI models but the computer arrived damaged. You email the online merchant you ordered from asking for a refund. Currently, a case would be opened in the online merchant’s issue tracking system with your email and other details of your account like your order history. A human would read your email, check your order history, and respond to your case. Maybe for large refunds, the online merchant requires a photo of the damaged item, which you did not provide. The agent responds asking for the photo and then moves on to working another case. When you respond with the photo, another agent is assigned the case, reviews the photo, decides it checks out, and issues a refund. After the refund is issued, they resolve the issue. All in all, this may have taken 30 minutes of agent time at, say, $25/hour all in, so $12.50 to resolve your case.

The workflow diagram below is cribbed from lapala.io. The workflow and software described above are very common.

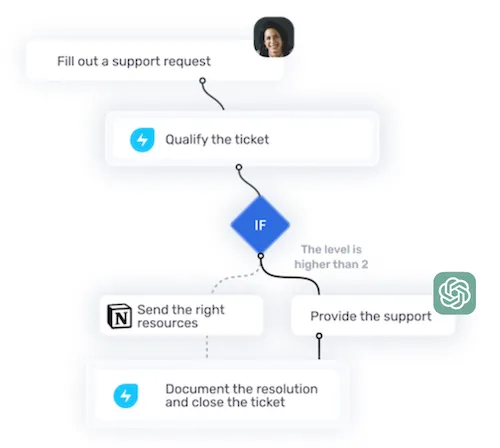

With generative AI agents, your email text and account details act as the prompt. An AI agent may be able to generate the “requires a photo” response. When you respond with the photo, that is added to the prompt and now an AI agent may be able to issue a refund. Let’s say a call to an AI agent costs $0.05. With two calls, one per response, that is $0.10 instead of $12.50. You just saved $12.40. This is in the fully automated case where the AI agent is fully empowered to respond to the customer.

Here’s the workflow diagram above where the human agent is replaced by an AI agent. As you can see, an AI agent fits in pretty seamlessly to these existing systems.

This isn’t just customer service. As I said, most workflows at companies are managed by an issue tracking system. Can AI agents pick up software bugs from the JIRA queue? Can AI agents respond to weird access patterns on your database? Agentic workflows are hot because the answer to these questions is at least “sometimes” and even “sometimes” can save you a lot of money.

What’s the Catch?

You’ll notice I said “may be able to generate the response” not “is able to generate the response”. As anyone who has used generative AI knows, these models are not 100% trustworthy. They make mistakes. “How many Rs are in the word strawberry?” is a famous example. The main challenge in agentic workflows is how to detect, prevent, or manage AI agent mistakes. Successful agentic workflows are successful at mitigating agent mistakes.

Human agents also make mistakes. Issue tracking systems have workflows to prevent mistakes, especially for high value tasks like the refund case above. Most issue tracking systems integrate the concept of human review. For instance, before a refund is issued, a manager must review and approve it. Can agentic workflows use the same approach to mitigate AI agent mistakes?

Human In The Loop

Can agentic workflows use human review to catch mistakes? The answer is a resounding yes. This approach is so common it has a name: “human in the loop”. It’s my impression that a fully empowered AI agent is still uncommon. Most agentic workflows are human in the loop.

Continuing our example from above, an AI agent generates a “proposed response” for the human agent to review. If the response looks good, the human agent clicks accept and sends the response. Let’s say this saves the agent 50% of the time it would have taken to write the response themselves. This now saves $6.15 instead of $12.40, still a lot of savings.
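To make the arithmetic in this example explicit, here is a minimal sketch in Python. The hourly rate, the 30 minutes per case, and the $0.05 per-call price come from the example above. The count of two AI calls per case (one to request the photo, one to issue the refund) is an assumption that reproduces the $12.40 figure, and the function names are made up for illustration.

```python
from decimal import Decimal

HOURLY_RATE = Decimal("25.00")    # all-in human agent cost per hour (from the example)
MINUTES_PER_CASE = Decimal("30")  # human time to resolve the case (from the example)
AI_CALL_COST = Decimal("0.05")    # cost of one AI agent call (from the example)
AI_CALLS_PER_CASE = 2             # ASSUMPTION: one call per customer-facing response


def human_cost() -> Decimal:
    """Cost of a case worked entirely by human agents."""
    return HOURLY_RATE * MINUTES_PER_CASE / Decimal("60")


def savings(human_time_saved: Decimal) -> Decimal:
    """Savings per case when AI removes the given fraction of human time."""
    ai_cost = AI_CALL_COST * AI_CALLS_PER_CASE
    return (human_cost() * human_time_saved - ai_cost).quantize(Decimal("0.01"))


if __name__ == "__main__":
    print("human only cost:   ", human_cost())
    print("fully automated:   ", savings(Decimal("1")))
    print("human in the loop: ", savings(Decimal("0.5")))
```

Under these assumptions, `savings(Decimal("1"))` is the fully automated case and `savings(Decimal("0.5"))` is the human in the loop case where review takes half the time of writing the response. Changing `AI_CALLS_PER_CASE` or the time saved shows how sensitive the savings are to each input.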

And our familiar workflow diagram, this time with the human agent reviewing our AI agent’s work.

Clever use of human in the loop as a user interface to AI agents is a winning strategy. One of the most popular agentic use cases right now is software development through the integrated development environment (IDE), Cursor. If you have used Cursor, you know the AI agent generates code for you when you take certain actions. You review it, iterating until it’s to your liking. If you don’t like what the AI created, you just revert the changes because your code is in version control. How can the Cursor model be extended to more domains beyond software development?

Version Control

I argue that the success of Cursor has a lot to do with the objects the AI agent is operating on. Cursor operates on code stored in files under version control. The AI agent produces code for review that can easily be diffed against existing code. If the AI agent makes a mistake, the developer reverts the changes. If the change is big, the AI agent can make the change on a branch. The human can review the changes in bulk and merge the changes if they look good. Version control is a critical piece of the agentic workflow experience in Cursor.

The fundamental building blocks of version control (diff, revert, branch, and merge) might also be the fundamental building blocks of agentic workflows. Version control allows thousands of software developers to collaborate asynchronously on large, complicated systems. Could version control also allow thousands of AI and human agents to collaborate asynchronously on large, complicated systems?

Given the lack of version control, current agentic workflows outside of software development are limited to very simple tasks that must be overseen by human review. Could version control enable more and larger agentic workflows?
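To make the four building blocks concrete for data rather than files, here is a toy, in-memory sketch in Python. It is not how Dolt or Git work internally. The class name, the row layout (primary key mapped to a status string), and the naive merge that assumes the target has not changed since branching are all simplifying assumptions for illustration.

```python
class ToyVersionedTable:
    """Toy model: every branch is a dict mapping primary key -> row value."""

    def __init__(self, rows):
        self.branches = {"main": dict(rows)}

    def branch(self, name, source="main"):
        # A new branch starts as a copy of its source branch.
        self.branches[name] = dict(self.branches[source])

    def diff(self, base, other):
        """Return {key: (old, new)} for each key that differs; None means absent."""
        a, b = self.branches[base], self.branches[other]
        return {
            key: (a.get(key), b.get(key))
            for key in sorted(a.keys() | b.keys())
            if a.get(key) != b.get(key)
        }

    def revert(self, name, source="main"):
        # Throw away the branch's changes by resetting it to the source state.
        self.branches[name] = dict(self.branches[source])

    def merge(self, name, into="main"):
        # Naive merge: apply the branch's diff to the target.
        # ASSUMPTION: the target has not changed since the branch was created.
        for key, (_, new) in self.diff(into, name).items():
            if new is None:
                self.branches[into].pop(key, None)
            else:
                self.branches[into][key] = new


if __name__ == "__main__":
    issues = ToyVersionedTable({1: "open", 2: "open"})
    issues.branch("agent")
    issues.branches["agent"][2] = "closed"  # the agent works on its own branch
    print(issues.diff("main", "agent"))     # a human reviews the diff
    issues.merge("agent")                   # and merges if it looks good
    print(issues.branches["main"])
```

The point of the sketch is that the agent never touches `main` directly: its work is visible as a diff, can be reverted, and only reaches `main` through a merge.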

YOLO

Alright Tim, my interest is piqued. Make your case.

Dear reader, look no further than Cursor itself. Cursor has YOLO Mode where you allow the AI to run commands that affect objects not under version control. Cursor will iterate through commands until the code generates the response you want. You Only Live Once (YOLO) is a warning that this is dangerous. The AI could delete a critical file on your file system. YOLO indeed. The message is clear: do not run agentic workflows on objects not under version control.

But what if you could turn YOLO into FAFO, fuck around and find out? What if the system the commands were operating on was also under version control? You could have YOLO mode operate on a branch and do whatever it wants. Fuck around, so to speak. We need the systems AI agents operate on to support branches. What is the data storage layer of almost every system in the world? It’s not files. It’s the humble SQL database and this is where Dolt comes in.

Turn YOLO into FAFO

Dolt is the first and only version controlled SQL database. Dolt supports diffs, revert, branch, and merge on tables instead of files. You can swap Dolt in for any SQL database.

With Dolt, your AI agents operate on branches, separate from production data. In development, the AI agent can operate on a branch or even a clone that is never intended to be merged. Once you’ve tuned the agent enough that you think it’s competent to generate work that can be merged into production, have it start working on branches that are intended to be merged. When the agent thinks it’s done, it can request human review. The human can review the changes directly as a table diff or in a custom UI built on top of the same table data. In Dolt, diffs can be queried just like standard SQL tables. If the changes look good, merge the branch. Or, if the agent did something bad, just delete the branch. No harm, no foul. Dolt turns YOLO into FAFO.
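As a rough sketch of that loop, the Python helper below builds the SQL an orchestrator might send to a Dolt SQL server at each stage. It assumes Dolt’s `DOLT_CHECKOUT`, `DOLT_COMMIT`, `DOLT_MERGE`, and `DOLT_BRANCH` stored procedures and its `DOLT_DIFF` table function behave as described in Dolt’s documentation. The helper name, the `agent-1` branch, and the `issues` table are hypothetical. The code only builds strings, so check the statements against your Dolt version before running them.

```python
import re

# Conservative pattern for branch and table names used in this sketch.
_NAME = re.compile(r"[A-Za-z0-9_][A-Za-z0-9_\-/]*")


def _check(name: str) -> str:
    """Reject names that could break out of a quoted SQL string."""
    if not _NAME.fullmatch(name):
        raise ValueError(f"unsafe identifier: {name!r}")
    return name


def _quote(text: str) -> str:
    """Quote free text by doubling single quotes (prefer bound parameters in real code)."""
    return "'" + text.replace("'", "''") + "'"


def agent_branch_plan(branch: str, table: str, message: str) -> dict:
    """Return the SQL statements for each stage of an agent's branch lifecycle."""
    branch, table = _check(branch), _check(table)
    return {
        # The agent gets its own branch, separate from production data on main.
        "start": [f"CALL DOLT_CHECKOUT('-b', '{branch}')"],
        # When the agent thinks it is done, it commits its changes.
        "finish": [f"CALL DOLT_COMMIT('-a', '-m', {_quote(message)})"],
        # The reviewer queries the diff like any other table.
        "review": [f"SELECT * FROM DOLT_DIFF('main', '{branch}', '{table}')"],
        # Changes look good: merge the branch into main.
        "accept": ["CALL DOLT_CHECKOUT('main')", f"CALL DOLT_MERGE('{branch}')"],
        # The agent did something bad: delete the branch. No harm, no foul.
        "reject": ["CALL DOLT_CHECKOUT('main')", f"CALL DOLT_BRANCH('-D', '{branch}')"],
    }


if __name__ == "__main__":
    plan = agent_branch_plan("agent-1", "issues", "Close stale issues")
    for stage, statements in plan.items():
        print(stage, statements)
```

Keeping the plan as data makes it easy to log exactly what an agent was allowed to run, and the `accept` and `reject` stages are the only places where a reviewer’s decision touches `main`.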

It doesn’t stop there. Dolt branches are lightweight so you can have thousands of them. Data in Dolt is structured so the breadth of possible diffs is more constrained than text in files. You could have thousands of agents operating on separate branches and if 99% of them are producing the same diff, you can treat that as consensus and merge one of the branches, deleting the rest. Dolt enables agentic review by consensus.
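Review by consensus can be sketched in a few lines of Python. This is an illustration of the idea, not a Dolt feature: it assumes each branch’s diff has already been fetched and normalized into a hashable set of changes. The function name, the tuple layout `(primary key, column, new value)`, and the branch names are hypothetical.

```python
from collections import Counter
from typing import Optional


def consensus_branch(diffs: dict, threshold: float = 0.99) -> Optional[str]:
    """Return a branch to merge if enough branches produced the same diff.

    `diffs` maps branch name -> frozenset of changes. Returns None when no
    single diff reaches the threshold share of branches.
    """
    if not diffs:
        return None
    winning_diff, votes = Counter(diffs.values()).most_common(1)[0]
    if votes / len(diffs) < threshold:
        return None
    # Any branch carrying the winning diff will do; pick by name for determinism.
    return min(name for name, diff in diffs.items() if diff == winning_diff)


if __name__ == "__main__":
    agreed = frozenset({(42, "status", "closed")})
    outlier = frozenset({(42, "status", "deleted")})
    diffs = {f"agent-{i:03d}": agreed for i in range(99)}
    diffs["agent-099"] = outlier
    print(consensus_branch(diffs))  # merge this branch, delete the rest
```

Structured data is what makes this workable: two agents that close the same issue produce identical row-level changes, whereas two agents editing free text rarely produce identical files.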

So, the promise of a version controlled database backing your agentic workflows is that the agent can perform more complex tasks. We’ve shown an illustrative example of how agents are currently used to draft responses in customer service. Continuing that example, let’s say you also have a nightly clean up job that closes dead issues, bumps the priority of issues that have languished, and performs various other clean up tasks. This job operates on hundreds or thousands of issues nightly. Right now, you probably have this job sending canned responses and generally doing mechanical tasks. The promise of an AI agent is that this job can close more issues with personalized responses to customers and generally do more complicated clean up tasks that currently require human decision making.

If Dolt is backing your issue tracking system, you can create this nightly clean up agent with a branch and start experimenting immediately with a powerful, risky agentic workflow. This agent might delete some things. That’s ok. You’re working on live customer data but the production data is not at risk. You can evaluate the viability of your new agentic workflow by querying the diff produced by your experimental agent. You can even build a custom user interface for evaluation using React, Django, Rails, or any other standard website framework because Dolt exposes diffs as SQL tables. This interface can be repurposed for human review of the agent’s results later. Maybe you decide that it’s better to have 100 agents work on 100 separate branches and you only choose to merge the changes that 99% of branches agree on. When the changes look good to either the human or the agent reviewer, merge the changes to main to ship the results to production. With Dolt, this is all possible using standard SQL.
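As one hedged example of evaluating such an experiment, the Python sketch below summarizes the rows of a diff between `main` and the agent’s branch. It assumes the rows arrive as dictionaries with a `diff_type` column whose values are `added`, `modified`, or `removed`, which is how Dolt’s diff output is documented. The function name and the `max_removed` threshold are hypothetical.

```python
from collections import Counter


def summarize_diff(rows, max_removed: int = 0) -> dict:
    """Count changes by type and flag runs that delete more rows than allowed.

    `rows` is any iterable of dict-like diff rows with a 'diff_type' key,
    for example the result of querying the diff for an issues table.
    """
    counts = Counter(row["diff_type"] for row in rows)
    return {
        "added": counts["added"],
        "modified": counts["modified"],
        "removed": counts["removed"],
        # Deletions are the riskiest change, so they trigger human review.
        "needs_human_review": counts["removed"] > max_removed,
    }


if __name__ == "__main__":
    nightly_rows = [
        {"diff_type": "modified", "to_id": 1},
        {"diff_type": "modified", "to_id": 2},
        {"diff_type": "removed", "from_id": 3},
    ]
    print(summarize_diff(nightly_rows))
```

A summary like this can gate the merge: runs that only modify rows could merge automatically, while runs that delete anything wait for a person to look at the full diff.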

As you can see, Dolt brings Cursor-like agentic workflows to any application.

Conclusion

This article argues that agentic workflows that write software, like those seen in Cursor, are successful because the objects being operated on by the agent are under version control. Dolt allows far more objects, anything in a SQL database, to be version controlled, vastly expanding the scope and scale at which AI agents can operate.

I begin and conclude this article with an image from The Matrix of Neo besting thousands of agents. Dolt is your Neo for agentic workflows. Do you have an agentic workflow use case for Dolt? Come by our Discord and tell us about it.