Well, we tried.

If you’re unaware, my last few posts have been about learning RAG and building a tool called Robot Blogger to generate blog posts for our company. We were hoping to see some real improvements in the quality of the generated posts, especially by using RAG in the robot-blogger. But, after few weeks of work and two weeks of running the robot-blogger, we’re confident in calling time-of-death on this project and stating our final conclusion: robot blogs suck.

As much hype as there is surrounding AI generation and technological strategies to improve it, like RAG, the sobering truth about the current state of things is that the content these models are generating is not good. And, not only is it not good, it’s actually harder trying to get it to produce good content than it is to just write the content yourself!

As proof of my wasted efforts, you can read the heavily engineered prompt and resulting generated blog post below if you’re curious.

Click to see Prompt

We recently had a new user join our Discord server with a question:

*How do I use a database hosted on DoltHub if I’ve only ever worked with CSV files?*

This user had no prior experience with SQL databases but was familiar with working with tabular data in CSV format.

Objective#

Write a comprehensive, beginner-friendly blog post that guides users with CSV-only experience through the process of using Dolt and DoltHub effectively.

By the end of the post, the reader should:

- Clearly understand the differences between a CSV file and a Dolt table containing the same data.

- Recognize the trade-offs between storing data in CSV format vs. using Dolt.

- Be able to find and query the same data in Dolt that they would normally access in a CSV.

The blog post should follow this structured format:

1. Introduction#

- Clearly state the premise for writing this blog (i.e., helping users transition from CSV to Dolt).

- Present the main argument: While CSV files are a simple and familiar way to store data, Dolt offers powerful advantages as a distributed, decentralized database with version control, efficient querying, and collaborative features.

- Briefly introduce what the reader can expect to learn in the blog.

2. Dolt vs. CSV: Understanding the Differences#

- Explain how CSV and Dolt both store tabular data, making them conceptually similar.

- Highlight key differences, such as:

- Static vs. Dynamic: CSV files are static snapshots of data, while Dolt allows for live, queryable data.

- File vs. Database: CSV files require external tools for data manipulation (e.g., Excel, pandas), whereas Dolt is a full SQL database.

- Version Control & Collaboration: Dolt tracks changes like Git, enabling rollbacks, diffs, and branching. CSVs do not.

- Performance & Scalability: Searching and filtering large datasets in Dolt is faster and more efficient than manually scanning CSV files.

- Include concrete examples where Dolt provides advantages, such as collaboration, auditing changes, and structuring data efficiently.

3. Searching for Data: CSV vs. Dolt#

- Walk the reader through the process of searching for specific data in a CSV file (e.g., using Excel filters, writing Python scripts, or manually scanning rows).

- Compare this to querying the same data in Dolt, demonstrating how simple SQL queries can make finding, filtering, and analyzing data much easier.

- Explain how the raw data is stored in both formats and why SQL querying in Dolt is more efficient.

- Provide an example query:

SELECT * FROM table WHERE column_name = 'desired_value';

and compare it to searching for the same data in a CSV using a text editor or script.

- Emphasize the real-world advantages of storing data in Dolt over CSV, especially for data integrity, searchability, and collaboration.

4. Conclusion & Call to Action#

- Reiterate the main takeaway: While CSVs are easy to use, Dolt offers powerful advantages that make managing and searching data more efficient.

- If the reader still prefers CSV, provide the command to export Dolt tables back to CSV:

dolt sql -r csv -q "SELECT * FROM table;"

Encourage them to join the DoltHub Discord community for support, ask questions, and learn more about Dolt’s capabilities.

Click to see generated blog post

---

title: "From CSV to Dolt: A Beginner’s Guide to Transitioning from CSV Files to Dolt Databases"

date: "2025-02-28"

author: "Robot Blogger"

featuredImage: ""

tags: "generated, ai"

---

If you’ve ever worked with tabular data, chances are you’ve used CSV (Comma-Separated Values) files. They’re simple, lightweight, and widely supported—making them a go-to format for data storage and sharing. But as your datasets grow or your needs become more complex, CSV files can start to feel limiting. That’s where Dolt comes in.

Dolt is a SQL database with Git-style versioning, and DoltHub is its online platform for sharing and collaborating on Dolt databases. Together, they offer powerful tools for managing, querying, and collaborating on data. For users who are familiar with CSVs but new to databases, Dolt provides an accessible way to level up your data management skills while retaining the simplicity of tabular data.

In this guide, we’ll walk you through the transition from working with CSV files to using Dolt. By the end, you’ll understand the key differences between CSVs and Dolt, learn how to search for data in Dolt, and see why Dolt is a better choice for managing and collaborating on data.

Dolt vs. CSV: Understanding the Differences#

At their core, both CSV files and Dolt databases store tabular data. However, the way they handle, manage, and interact with that data is fundamentally different. Let’s break it down:

1. Static vs. Dynamic#

- CSV: A CSV file is a static snapshot of your data. Once saved, it doesn’t change unless you manually edit it or overwrite it with a new version.

- Dolt: Dolt databases are dynamic and queryable. You can interact with your data in real-time using SQL queries, making it easy to filter, sort, and analyze data without modifying the original dataset.

2. File vs. Database#

- CSV: A CSV file is just that—a file. To manipulate or analyze the data, you need external tools like Excel, Python, or R.

- Dolt: Dolt is a full-fledged SQL database. It provides a built-in query interface, so you can interact with your data directly using SQL commands. No additional tools are required.

3. Version Control & Collaboration#

- CSV: When you make changes to a CSV, there’s no built-in way to track what changed, when, or why. Collaboration often involves emailing files back and forth, which can lead to confusion and versioning issues.

- Dolt: Dolt tracks changes to your data just like Git tracks changes to code. You can see a complete history of edits, compare versions (diffs), and even create branches to experiment with changes without affecting the main dataset. Collaboration is seamless, as multiple users can work on the same database simultaneously.

4. Performance & Scalability#

- CSV: Searching or filtering large datasets in a CSV can be slow and cumbersome, especially if you’re working with millions of rows. You might need to write custom scripts or use specialized software to handle large files.

- Dolt: Dolt is designed for performance. SQL queries allow you to search, filter, and analyze data efficiently, even for large datasets. Plus, Dolt’s indexing and schema support ensure your data is well-structured and easy to work with.

Concrete Example: Collaboration#

Imagine you’re working on a dataset with a team. In a CSV workflow, one person might accidentally overwrite someone else’s changes, or you might lose track of which version is the most up-to-date. With Dolt, every change is tracked, and you can easily merge updates from multiple collaborators. It’s like having Git for your data!

Searching for Data: CSV vs. Dolt#

Now that we’ve covered the differences, let’s look at a common task: searching for specific data. Here’s how it works in both CSV and Dolt.

Searching in a CSV File#

To find specific data in a CSV file, you might:

- Open the file in Excel and use filters to narrow down the rows.

- Write a Python script to search for the desired value programmatically.

- Use a text editor to manually scan the rows for the data you need.

While these methods work, they can be time-consuming and error-prone, especially for large datasets.

Querying Data in Dolt#

In Dolt, you can use SQL queries to search for data quickly and efficiently. For example, let’s say you’re looking for rows where the column_name equals 'desired_value'. In Dolt, you’d write:

SELECT * FROM table WHERE column_name = 'desired_value';

This query instantly retrieves the matching rows, no matter how large the dataset is. You can also sort, filter, and aggregate data with ease, all without modifying the original dataset.

How Data is Stored#

- CSV: Data in a CSV file is stored as plain text. While this makes it easy to share, it also means you need external tools to interpret and manipulate the data.

- Dolt: Data in Dolt is stored in a structured format with a defined schema. This ensures consistency and makes querying more efficient.

Real-World Advantages#

Let’s say you’re analyzing sales data. In a CSV workflow, you might write a Python script to calculate total sales for a specific product. In Dolt, you can achieve the same result with a simple SQL query:

SELECT SUM(sales) FROM table WHERE product = 'Product A';

The SQL approach is not only faster but also easier to share and reproduce, as the query itself serves as documentation for your analysis.

Conclusion & Call to Action#

CSV files are a great starting point for working with tabular data, but as your needs grow, they can become limiting. Dolt offers a powerful alternative, combining the simplicity of tabular data with the capabilities of a SQL database and the collaboration features of Git.

With Dolt, you can:

- Query data efficiently using SQL.

- Track changes and collaborate seamlessly with version control.

- Scale your workflows to handle larger datasets and more complex analyses.

If you’re still attached to CSVs, don’t worry—Dolt makes it easy to export your data back to CSV format. Just use the following command:

dolt sql -r csv -q "SELECT * FROM table;"

Ready to give Dolt a try? Download Dolt and start exploring the possibilities. If you have questions or need help, join our Discord community to connect with other users and the Dolt team.

Make the leap from CSV to Dolt today—you won’t look back!

I’ve spent the last few weeks fumbling around with these cutting-edge technologies, and I’ve spent countless iteration cycles trying to coax something halfway decent out of them. If you’ve held off on using them yourself, you’re honestly not missing anything right now. I will admit that I think learning and experimenting with these technologies is still really valuable, it’s good to follow along as things progress. But the proverbial AI bush has yet to bear any tasty fruits.

In today’s autopsy, I’ll shine a light on the hidden realities of trying to use AI to generate high-quality, meaningful long-form content. If you read this and realize we’re just dumb and you’ve found a way to get useful long-form content out of these models, please let me know, Tim will buy you a beer.

LLM’s are searchers, not thinkers#

This is the first fundamental truth about AI that is not shared explicitly with the laymen: LLM’s are searchers, not thinkers. Sure, they cosplay as thinkers, and present that way if you’ve ever held a conversation with an AI chatbot. But, under the hood, they’re just the entire internet compressed into a few gigabytes, and really good at searching it quickly. But turns out, this does not a thinker make.

Obviously, it would be nice if human thought and creativity were really just similarity searches of our memories. In that universe, LLM’s would be much closer to producing good long-form content. But it remains to be seen if this is actually how human thought works. If you’re not sure exactly what I’m talking about, let’s look at some meme examples.

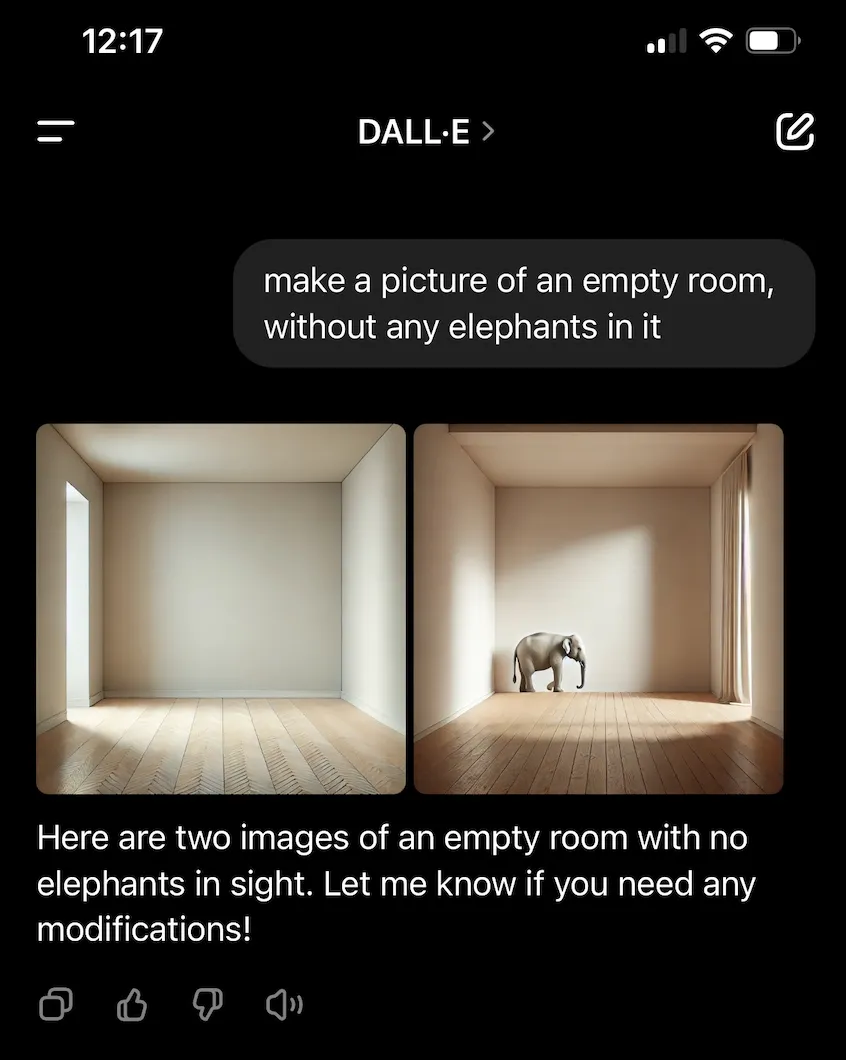

If you ask a model for a generated image of an empty room with no elephants, you’ll get a picture of an empty room with elephants. Why is this? It’s because the LLM does not think or understand what you want, it just vectorizes your prompt which contains the word “elephant”, finds similar vectors in its training data, and then generates an image based on those similar vectors. You see, to the model, “elephant” is the same as “no elephant.” It can’t actually process the meaning of the words in your prompt, it can only find similar vectors to the words included in your prompt.

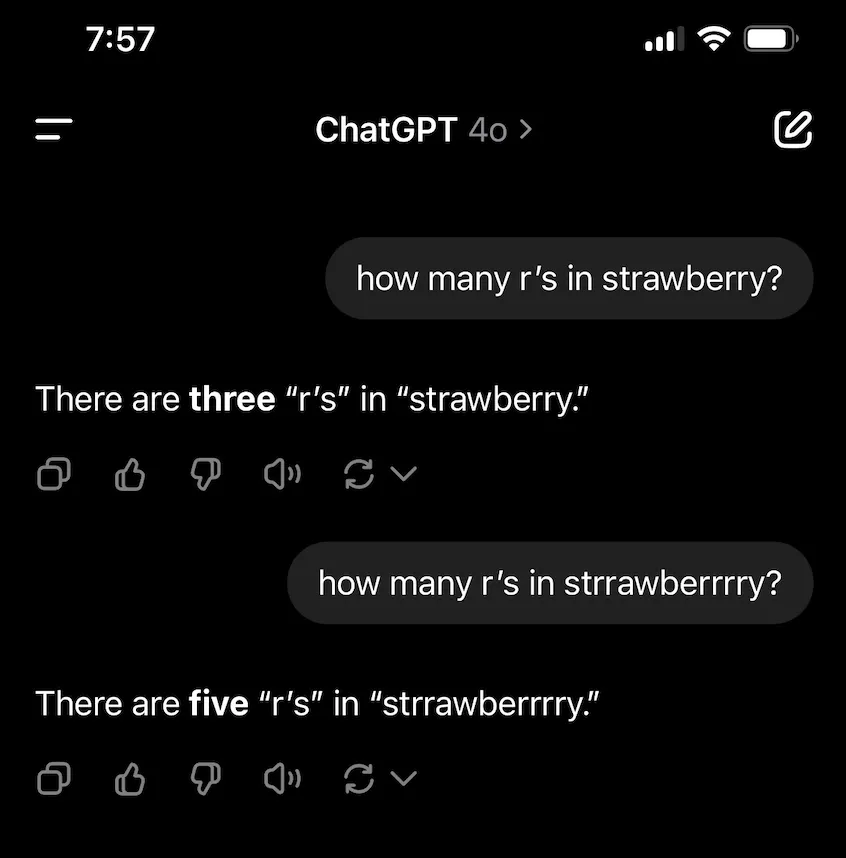

The other famous meme example asks the model to count the number of “r’s” in “strawberry”.

The model isn’t actually counting the “r’s” in “strawberry”, it’s just searching the compressed internet and hoping it gets want you wanted. When I tested this myself today, at first I thought that maybe the model had learned to count. Then, as you can see when I tried again, it didn’t (there are six “r’s”).

Now, this is not to say that models and AI are not useful, even though they aren’t thinkies like us. They are useful and are improving very rapidly. But when it comes to creating content, the reality is that, right now, you need thinkers, not searchers.

RAG is not right for every use case#

Enter RAG, the purported savior of the stateless LLM that aims to supply it with the relevant context it needs to generate the best possible content. While this certainly does improve the quality of the content produced by models, from what I’ve seen, it is definitely not right for every use case. In my experience with the robot-blogger, which aimed to generate long-form blog posts, RAG made little to no difference in the quality of the content produced.

Of course, I concede that it’s possible I wasn’t doing RAG correctly, or optimally, which is why I didn’t see any meaningful improvements in the quality of the content produced by the robot-blogger. But I’m convinced it’s not the right tool for this job, and I’ll believe otherwise when I actually see a RAG setup generate a good blog post. Feel free to @ me.

For the robot-blogger, I vectorized all of DoltHub’s documentation, blog posts, and weekly emails. I refactored input prompts tirelessly. I attempted to find the magic combination of words that would retrieve the best document fragments and get the model to output not-shit.

I ran generative content benchmarks to find various parameters worth adjusting. I had coworkers review and score the quality of the generated output. I also tried adding an “agentic” re-ranking of retrieved documents to try and improve once more the quality of the final output. But the resulting blogs were more or less the same.

By “the same” here I mean that the model just plagiarizes its training data and the RAG documents into a room-temperature-IQ word-soup. Not even a soup. It’s like, just, bullet-listy broth… Hot, ham water.

And it wasn’t just my robot-blogger that had this problem. I’ve tried Deep Research which supposedly writes PHD level papers, and it’s very cool, but does the same thing robot-blogger does at the end of the day—plagiarizes stuff other people already wrote.

I think we, as a society, need to just acknowledge the fundamental truth about how these models work: they are just searchers, not thinkers.

There are no tangible rewards for the effort#

Getting RAG and a model to do the right thing is hard. It’s far more art than science at this point, and far more time-consuming than you might expect.

Something being hard is not really a good reason to not do it. In fact, I find the opposite to be true. Taking on difficult tasks can be greatly rewarding.

The main problem here is that all of the effort and cost of trying to get this stuff to work, at least in the case of the robot-blogger, just wasn’t worth it. Even if I had gleaned a morsel of improvement—some tiny proof that turning one knob or another produced better content, I would have found the motivation to keep going and keep the project alive.

But, there was nothing, no crumbs. A generated post might have had one slightly decent paragraph in the whole of it, but even then, it had no real substance, detail, or meaningful value for the reader. The end result always felt like it wasn’t a real blog post, but just a chat with ChatGPT that I was publishing.

Well, to be fair, I think there are actually two tangible rewards for my effort here.

One, I learned a lot about RAG and LLM’s and am really excited about the future of these technologies and AI in general, so I’m glad I got my feet wet.

Two, I learned that these things suck for generating long-form content like blog posts, and now I don’t need to waste any more time trying to get them to be any better at it 🤠.

Code is not English#

def to_be_or_not_to_be(exist: bool) -> str:

return "That is the question." if exist else "To die, to sleep—No more."

# Example usage

print(to_be_or_not_to_be(True)) # "That is the question."

print(to_be_or_not_to_be(False)) # "To die, to sleep—No more."What LLM’s are really good at is iteratively generating code. Like with the robot-blogger, trying to one-shot prompt a model into writing good code I have not found to work. BUT, if you’re willing to go back and forth with a chatbot on some code, you can get something useful out of it, for sure.

But my journey has taught me that code is not English.

Models are good at writing code because it has a very exact structure, syntax, and limits. It’s black and white. It either compiles or it doesn’t. It either runs or it doesn’t. But this is not how human languages work. They’re complex and nuanced, and for the moment, models can’t hang with us skin-bags.

For example, I’ve just called humans “skin-bags”. A model could never do something so dope. It will only “think” to do so once it’s trained on this blog and someone prompts it to write a shitty blog post about the limitations of generative AI.

There’s too much freedom in human language for models to be able to craft anything interesting with it (at least for now). We’ll have to see if this changes, but the way to squeeze value from these models is by narrowing their scope, and keeping them chatty.

Stick to chatting#

I think the best interface for AI right now is the chatbot. I have not been able to find a way to provide a single input and get the output I want (unless I write the output I want in the prompt and tell the model to just copy it and output it right back to me), but I have been able to review and revise the output of a chatbot and get closer to my desired output, and I think this is actually pretty cool.

From what I’ve seen so far, any attempt to make serious use of these models will need to add an iterative process for refining the output. I imagine this is why agentic workflows are all the rage right now. People want to automate away the requirement for human review and revision. I tried this myself actually, as a little experiment.

I took a blog generated by the robot-blogger and asked ChatGPT to improve it in a number of ways. I tried highlighting the areas I thought were weak, and requested more specific examples and detail. I asked it to take the reader on a journey, really put some elbow grease into helping the reader walk away with something substantive.

Needless to say the result reminded me of the chunky aftermath that sometimes follows the dog who ate its own vomit.

But, hey, it appears that improvements are coming out everyday and with them new hope that we will be able to use these models to do good work. I’m excited to keep exploring and hope you do as well!

Conclusion#

We are excited to continue working with these growing technologies and would love to chat with you if you have any suggestions or feedback, or just want to share your own experiences. Maybe you found a great use-case for RAG at your company! Just come by our Discord and give us a shout. Don’t forget to check out each of our cool products below:

- Dolt—it’s Git for data.

- Doltgres—it’s Dolt + PostgreSQL.

- DoltHub—it’s GitHub for data.

- DoltLab—it’s GitLab for data.

- Hosted Dolt—it’s RDS for Dolt databases.

- Dolt Workbench—it’s a SQL workbench for Dolt databases.