Dolt is a decentralized database. In the past ten years or so, decentralization has gone through a few hype cycles. I think we’re in a pro-decentralization hype period right now. Decentralization hype tracks the price of Bitcoin, and last I checked, that’s near all-time highs.

Decentralization in the Dolt context means open data that looks like open source: forks, branches, and pull requests. In service of Dolt’s decentralized mission, we think Wikipedia is a database that would benefit from this model. We got Dolt to work with MediaWiki and started importing a Wikipedia dump back in April. The Wikipedia database is on DoltHub. Is the import complete? Not even close. So, I thought it was time for an update.

## How We Got Here

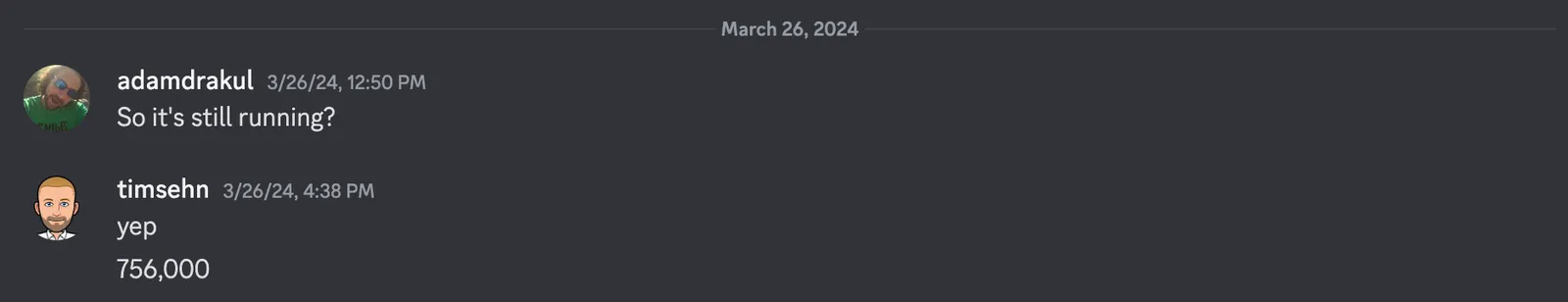

We have a Discord user, Adam, who really thinks Wikipedia should be published in an easily consumable form. He tried to get a dump into Dolt himself and could not. A lot of my ego is wrapped up in Dolt, so I got mad and rage-fixed it. If it works with MySQL, it has to work with Dolt!

A few weeks later, I had a working MediaWiki with a few hundred thousand Wikipedia pages imported. This is the first material commit of Wikipedia pages:

```text
commit j70r7kdfr89p2c9kcpk6builn1t0m3s3
Author: timsehn <tim@dolthub.com>
Date: Wed Mar 13 18:48:25 -0700 2024

    Progress to about 72,500
```

Fast-forward seven months of import work, and we’ve learned a ton about how to run Dolt at scale. I have to leave my Mac laptop at work, chugging along importing. I have a Windows machine at home. Frankly, it has been a nice break from rucking my laptop to and from work. Yes, I’m a walking commuter. We’re at about 7.2M pages imported.

```text
commit 0rk1egtiio1536kthp12f1tv8k5l5e9l (HEAD -> main)
Author: timsehn <tim@dolthub.com>
Date: Thu Dec 05 09:57:18 -0800 2024

    7,227,000 pages imported
```
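Those commit messages double as a progress log, but they are free-form ("Progress to about 72,500" versus "7,227,000 pages imported"). If you want to chart progress from the commit log, a minimal Python sketch is to pull the first number out of each message. This is a heuristic that assumes messages shaped like these two; a message containing a date or any other number would fool it.

```python
import re
from typing import Optional

# Matches integers with thousands separators ("7,227,000") or plain digit runs.
NUMBER = re.compile(r"\d{1,3}(?:,\d{3})+|\d+")

def pages_from_message(message: str) -> Optional[int]:
    """Heuristically extract a page count from a free-form commit message."""
    match = NUMBER.search(message)
    if match is None:
        return None  # e.g. "Added Dolt database page" carries no count
    return int(match.group().replace(",", ""))

messages = ["Progress to about 72,500", "7,227,000 pages imported"]
print([pages_from_message(m) for m in messages])  # [72500, 7227000]
```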

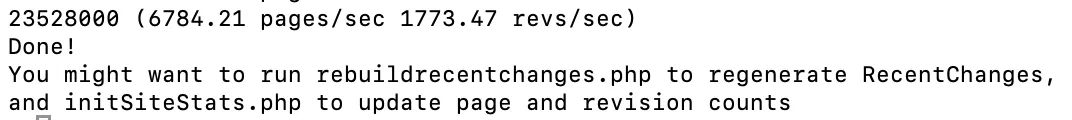

I started this task thinking there were 6.7M pages in Wikipedia. But, much to my chagrin, an article is not a page: there are 6.7M articles in Wikipedia, and I only realized this after surpassing 6.7M pages imported. So how many pages do I have to import? It turns out the import has a `--dry-run` flag that processes the import but doesn’t run the SQL. The dry run took about 4 hours to complete.

The answer: 23.5M pages. It looks like I have a few more months of importing to go. I import about 40,000 pages per day.
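The remaining-work arithmetic, as a small Python sketch. It assumes the 40,000 pages/day rate stays constant and uses the 23.5M total from the dry run; both are rough figures.

```python
import math

def days_remaining(total_pages: int, imported: int, pages_per_day: int) -> int:
    """Whole days left at a constant import rate, rounded up."""
    remaining = total_pages - imported
    return math.ceil(remaining / pages_per_day)

TOTAL_PAGES = 23_500_000   # page count reported by the --dry-run pass
IMPORTED = 7_227_000       # from the Dec 5, 2024 commit message
PAGES_PER_DAY = 40_000     # approximate current import rate

print(f"{TOTAL_PAGES - IMPORTED:,} pages left")                      # 16,273,000 pages left
print(days_remaining(TOTAL_PAGES, IMPORTED, PAGES_PER_DAY), "days")  # 407 days
```

At a constant 40,000 pages per day, the remaining pages take roughly 407 days; any speedup in the import shortens that proportionally.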

Why not parallelize the work, you say? Well, the Wikipedia database uses full-text indexes. Dolt supports full-text indexes but can’t merge them. So, merging parallel imports back together would require rebuilding the whole full-text index, which would take an unknown amount of time and memory. I’ve considered modifying the import to remove the full-text indexes altogether. But I’m kind of pot-committed to my current approach. Give me a couple more months to get bored.
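For a sense of what parallelizing would involve, here is a hypothetical Python sketch that splits the import into contiguous ranges of pages (by position in the dump), one per worker branch. Nothing here is part of the actual import, and the branch names are made up. The catch is the step this sketch leaves out: every one of those branches would have to be merged back, and with full-text indexes each merge means rebuilding the index.

```python
from typing import List, Tuple

def partition(total_pages: int, workers: int) -> List[Tuple[str, int, int]]:
    """Hypothetical plan: (branch_name, first_page, last_page) per worker.

    Page positions are 1-indexed and inclusive; ranges never overlap and
    together cover every page. Earlier workers absorb any remainder.
    """
    if workers <= 0:
        raise ValueError("need at least one worker")
    base, extra = divmod(total_pages, workers)
    plan, start = [], 1
    for i in range(workers):
        size = base + (1 if i < extra else 0)
        plan.append((f"import-{i}", start, start + size - 1))
        start += size
    return plan

for branch, first, last in partition(23_500_000, 4):
    print(branch, first, last)
```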

## Why It Matters

Wikipedia has been in the news lately with Elon Musk tweeting (errr…Xing…errr) at Jimmy Wales.

I think if you could easily create your own fork of Wikipedia, sync it with the main version, and resolve conflicts as necessary, a few competing Wikipedias could emerge. Or, the mere threat of that outcome would hold the editors of Wikipedia more accountable and probably reduce any political bias that exists. Decentralized data governance for something as important as Wikipedia, the open repository of the world’s knowledge, would be a massive improvement.

## Fork-able, Sync-able Wikipedia

Imagine Wikipedia as a GitHub repository where each page is a file. That would be a very big Git repository, and you’d have trouble serving and editing it at web scale. So, Wikipedia is backed by a database: MySQL. To have a Git-style Wikipedia, you need a Git-style database that supports branch and merge. That is Dolt, and it is MySQL-compatible. Dolt enables the fork-able, sync-able Wikipedia described above.
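To make "sync with the main version and resolve conflicts" concrete, here is a toy three-way merge over pages keyed by title, written in Python. It illustrates the general technique only; it is not Dolt’s merge implementation, which works on SQL table rows rather than whole pages. A page missing from one side counts as deleted on that side.

```python
from typing import Dict, List, Tuple

Pages = Dict[str, str]  # page title -> wikitext

def three_way_merge(base: Pages, ours: Pages, theirs: Pages) -> Tuple[Pages, List[str]]:
    """Merge two forks against their common ancestor; return (merged, conflicts)."""
    merged: Pages = {}
    conflicts: List[str] = []
    for title in sorted(base.keys() | ours.keys() | theirs.keys()):
        b, o, t = base.get(title), ours.get(title), theirs.get(title)
        if o == t:        # both sides agree (including both deleted)
            result = o
        elif o == b:      # only their side changed
            result = t
        elif t == b:      # only our side changed
            result = o
        else:             # both changed differently: a human must resolve it
            conflicts.append(title)
            result = o    # keep our version until resolved
        if result is not None:
            merged[title] = result
    return merged, conflicts

base = {"Dolt": "A SQL database.", "Git": "A version control system."}
ours = {"Dolt": "A version-controlled SQL database.", "Git": "A version control system."}
theirs = {"Dolt": "A SQL database.", "Git": "A distributed version control system.",
          "MySQL": "An open-source SQL database."}

merged, conflicts = three_way_merge(base, ours, theirs)
print(conflicts)  # []
```

Each fork keeps its own edits and picks up the other’s non-conflicting ones; only pages both sides changed differently need review.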

## Example

This example is from the original MediaWiki article but still holds true. It’s a great example of the decentralized open data workflow that Dolt and DoltHub enable.

### Set Up a Local Branch to Edit

First, you will need to clone the Wikipedia database. That will take a while. Follow the steps in the original article to get MediaWiki running against that database. Then you’ll be all set up.

We’re going to make a new branch using the Dolt CLI. Navigate to the directory you cloned the Wikipedia database into (in our case, `~/dolt/`) and go into the directory called `media_wiki`. Then use the `dolt branch` command to create a branch, just like you would in Git.

```shell
$ pwd
/Users/timsehn/dolt/media_wiki
$ dolt branch local
$ dolt branch
  local
* main
```

Now, we have a new branch called `local` to connect to.
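If you script this step, you may want to create the branch only when it doesn’t exist yet. A minimal Python sketch, assuming the `dolt` CLI is on your `PATH` and that `dolt branch` prints the Git-style listing shown above (one branch per line, `* ` marking the checked-out branch). The helper names are hypothetical.

```python
import subprocess
from typing import List, Optional, Tuple

def parse_branches(output: str) -> Tuple[List[str], Optional[str]]:
    """Parse `dolt branch` output into (all branches, checked-out branch)."""
    branches: List[str] = []
    current: Optional[str] = None
    for line in output.splitlines():
        name = line.strip()
        if not name:
            continue
        if name.startswith("* "):  # the asterisk marks the current branch
            name = name[2:].strip()
            current = name
        branches.append(name)
    return branches, current

def ensure_branch(name: str, repo_dir: str) -> None:
    """Run `dolt branch <name>` in repo_dir unless the branch already exists.

    Raises subprocess.CalledProcessError if either dolt command fails.
    """
    listing = subprocess.run(
        ["dolt", "branch"], cwd=repo_dir, check=True, capture_output=True, text=True
    ).stdout
    existing, _ = parse_branches(listing)
    if name not in existing:
        subprocess.run(["dolt", "branch", name], cwd=repo_dir, check=True)

print(parse_branches("  local\n* main\n"))  # (['local', 'main'], 'main')
```

With Dolt installed, `ensure_branch("local", os.path.expanduser("~/dolt/media_wiki"))` would reproduce the `dolt branch local` step idempotently.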

### Point Your MediaWiki at the Branch

Navigate to the root of your MediaWiki install and edit LocalSettings.php to point at your new branch.

```shell
$ cd /opt/homebrew/var/www/w/
```

In the database section of `LocalSettings.php`, append the branch name to the database name, separated by a slash.

```diff
 ## Database settings
 $wgDBtype = "mysql";
 $wgDBserver = "127.0.0.1";
-$wgDBname = "media_wiki";
+$wgDBname = "media_wiki/local";
 $wgDBuser = "root";
 $wgDBpassword = "";
```

Now, you are connecting to the new branch called `local`.
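The `database/branch` name is what selects the branch. If you want to check programmatically which branch a connection is using, here is a minimal Python sketch that splits such a name and pulls the `connectionDb` field out of a SQL server debug log line (the sample is copied from the log in this walkthrough). Splitting on the first `/` only is an assumption, made so that Git-style branch names containing slashes stay intact.

```python
import re
from typing import Optional, Tuple

def split_db_name(db_name: str) -> Tuple[str, Optional[str]]:
    """Split 'media_wiki/local' into ('media_wiki', 'local').

    Assumption: only the first '/' separates database from branch.
    A name without '/' means the default branch, returned as None.
    """
    database, sep, branch = db_name.partition("/")
    return database, (branch if sep else None)

# Captures the value of the connectionDb=... field in a debug log line.
CONNECTION_DB = re.compile(r"connectionDb=(\S+)")

def connection_db(log_line: str) -> Optional[str]:
    match = CONNECTION_DB.search(log_line)
    return match.group(1) if match else None

line = ('DEBU[0251] Starting query connectTime="2024-04-03 11:39:25.297596 -0700 PDT '
        'm=+9.767598584" connectionDb=media_wiki/local connectionID=1 query="..."')
print(split_db_name(connection_db(line)))  # ('media_wiki', 'local')
```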

I can confirm this in the debug logs of the running SQL server.

```text
DEBU[0251] Starting query connectTime="2024-04-03 11:39:25.297596 -0700 PDT m=+9.767598584" connectionDb=media_wiki/local connectionID=1 query="..."
```

### Make a Commit and Push

Now let’s make a new page on our branch and then make a Pull Request on DoltHub.

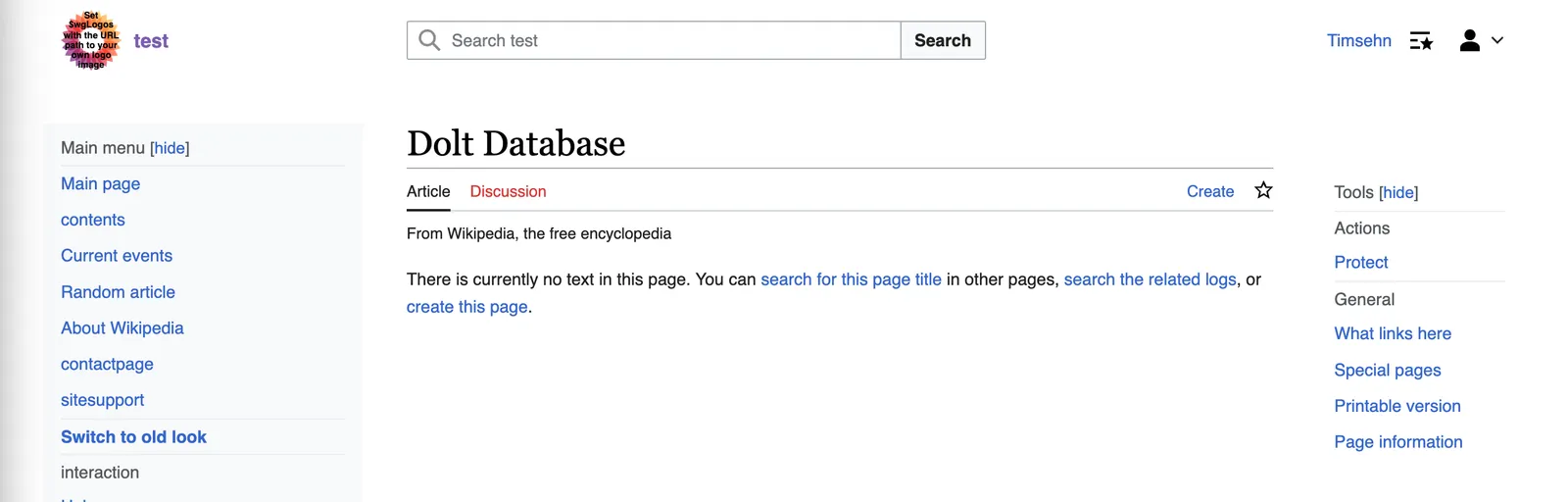

Click Create this page.

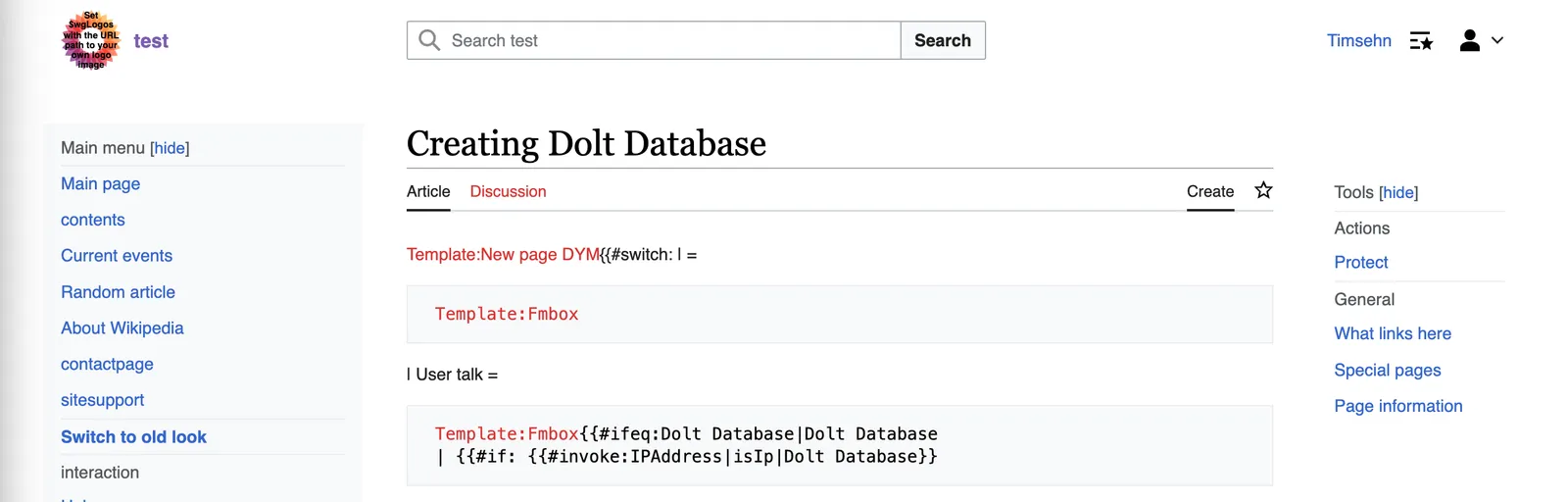

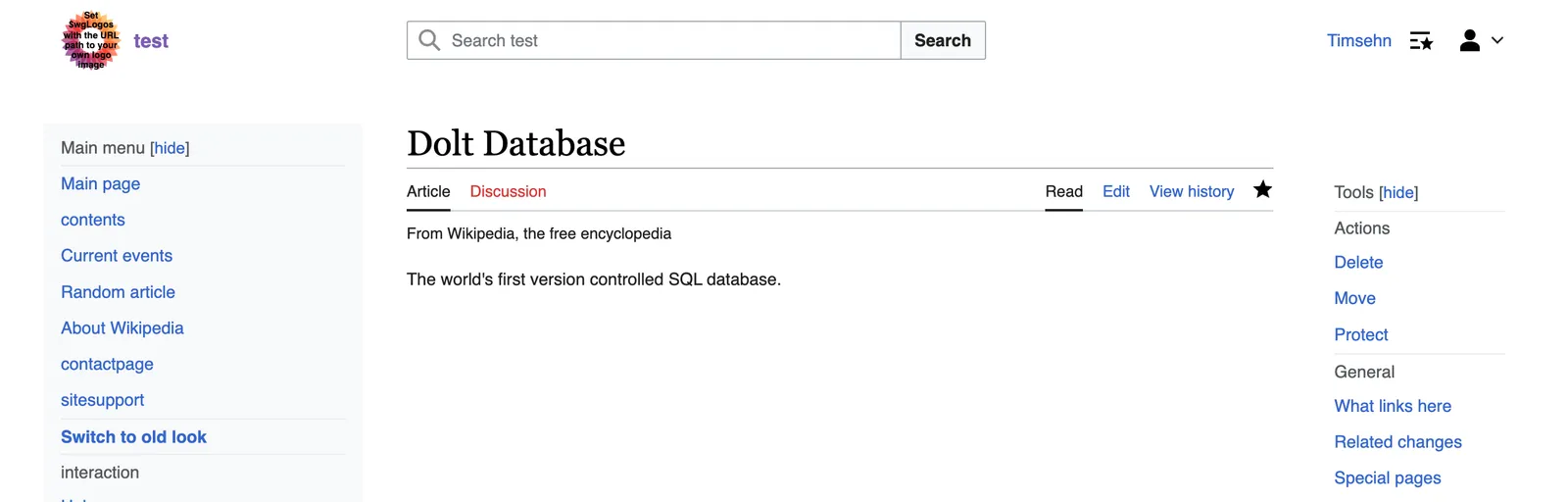

Create your article and you’ll end up with something like this:

Stop the SQL server and check out the local branch.

```shell
$ dolt checkout local
```

You can see what you’ve created by examining the diff. Take a moment to marvel at how cool Dolt is. Unlike other databases, you can see what you changed!

```shell
$ dolt status
On branch local
Changes not staged for commit:
  (use "dolt add <table>" to update what will be committed)
  (use "dolt checkout <table>" to discard changes in working directory)
        modified: watchlist
        modified: slots
        modified: module_deps
        modified: recentchanges
        modified: log_search
        modified: content
        modified: user
        modified: job
        modified: revision
        modified: objectcache
        modified: page
        modified: comment
        modified: searchindex
        modified: logging
        modified: text
```
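Creating a single page touched 15 tables. If you want to script this check, here is a minimal Python sketch that collects the table names from the `modified:` lines of `dolt status` output. It only recognizes that one kind of line and assumes the layout shown above.

```python
from typing import List

def modified_tables(status_output: str) -> List[str]:
    """Return table names listed on 'modified:' lines of `dolt status` output."""
    tables: List[str] = []
    for line in status_output.splitlines():
        entry = line.strip()
        if entry.startswith("modified:"):
            tables.append(entry[len("modified:"):].strip())
    return tables

sample = """On branch local
Changes not staged for commit:
  (use "dolt add <table>" to update what will be committed)
        modified: page
        modified: text
"""
print(modified_tables(sample))  # ['page', 'text']
```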

```shell
$ dolt diff text
diff --dolt a/text b/text
--- a/text
+++ b/text
+---+---------+----------------------------------------------------+-----------+
|   | old_id  | old_text                                           | old_flags |
+---+---------+----------------------------------------------------+-----------+
| + | 1209461 | The world's first version controlled SQL database. | utf-8     |
+---+---------+----------------------------------------------------+-----------+
```
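The `+` in the first column marks an added row. For scripting, here is a minimal Python sketch that turns the bordered table printed by `dolt diff` into dictionaries keyed by column name. It assumes exactly the layout shown here and that no cell value contains a `|`, so treat it as a quick hack over human-oriented output rather than a robust parser.

```python
from typing import Dict, List

def added_rows(diff_output: str) -> List[Dict[str, str]]:
    """Collect rows marked '+' from a `dolt diff` table, keyed by column name."""
    header: List[str] = []
    rows: List[Dict[str, str]] = []
    for line in diff_output.splitlines():
        if not line.startswith("|"):
            continue  # skip borders (+---+) and the diff/---/+++ header lines
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        marker, values = cells[0], cells[1:]
        if not header and marker == "":
            header = values  # the first row with an empty marker holds column names
        elif marker == "+":
            rows.append(dict(zip(header, values)))
    return rows

sample = """diff --dolt a/text b/text
--- a/text
+++ b/text
+---+---------+----------------------------------------------------+-----------+
|   | old_id  | old_text                                           | old_flags |
+---+---------+----------------------------------------------------+-----------+
| + | 1209461 | The world's first version controlled SQL database. | utf-8     |
+---+---------+----------------------------------------------------+-----------+
"""
for row in added_rows(sample):
    print(row["old_id"], row["old_text"])
```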

```shell
$ dolt diff page
diff --dolt a/page b/page
--- a/page
+++ b/page
+---+---------+----------------+---------------+------------------+-------------+----------------+----------------+--------------------+-------------+----------+--------------------+-----------+
|   | page_id | page_namespace | page_title    | page_is_redirect | page_is_new | page_random    | page_touched   | page_links_updated | page_latest | page_len | page_content_model | page_lang |
+---+---------+----------------+---------------+------------------+-------------+----------------+----------------+--------------------+-------------+----------+--------------------+-----------+
| + | 1209460 | 0              | Dolt_Database | 0                | 1           | 0.651856670903 | 20240403191437 | 20240403191437     | 1211506     | 50       | wikitext           | NULL      |
+---+---------+----------------+---------------+------------------+-------------+----------------+----------------+--------------------+-------------+----------+--------------------+-----------+
```

Now we’ll make a Dolt commit on our branch so we can send the changes to DoltHub.

```shell
$ dolt commit -am "Added Dolt database page"
commit n8v7boeva91c198ip4h1uichrl0thssp (HEAD -> local)
Author: timsehn <tim@dolthub.com>
Date: Wed Apr 03 11:59:44 -0700 2024

    Added Dolt database page
```

Finally, we push our changes to DoltHub.

```shell
$ dolt push origin local
/ Uploading...
To https://doltremoteapi.dolthub.com/timsehn/media_wiki
 * [new branch] local -> local
```

### Open a PR on DoltHub

Now, we want our changes reviewed and merged into the main copy of Wikipedia. Meanwhile, our local copy keeps our new article, and its users can continue to enjoy our version. This is the beauty of decentralized collaboration. There can be multiple competing Wikipedias!

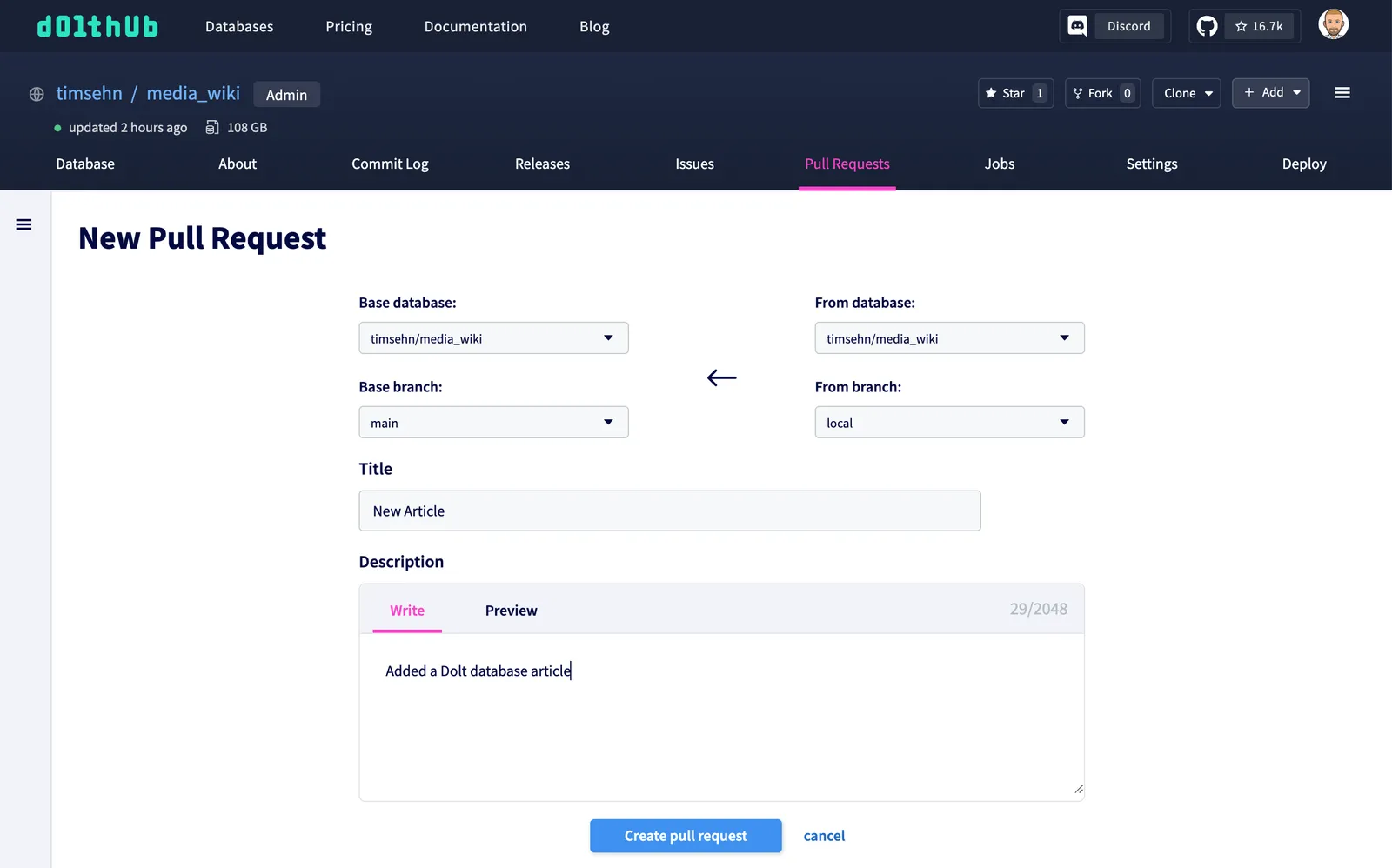

So, we open a Pull Request on DoltHub. Obviously, you could create your own coordination user interface using Dolt primitives. You don’t need DoltHub.

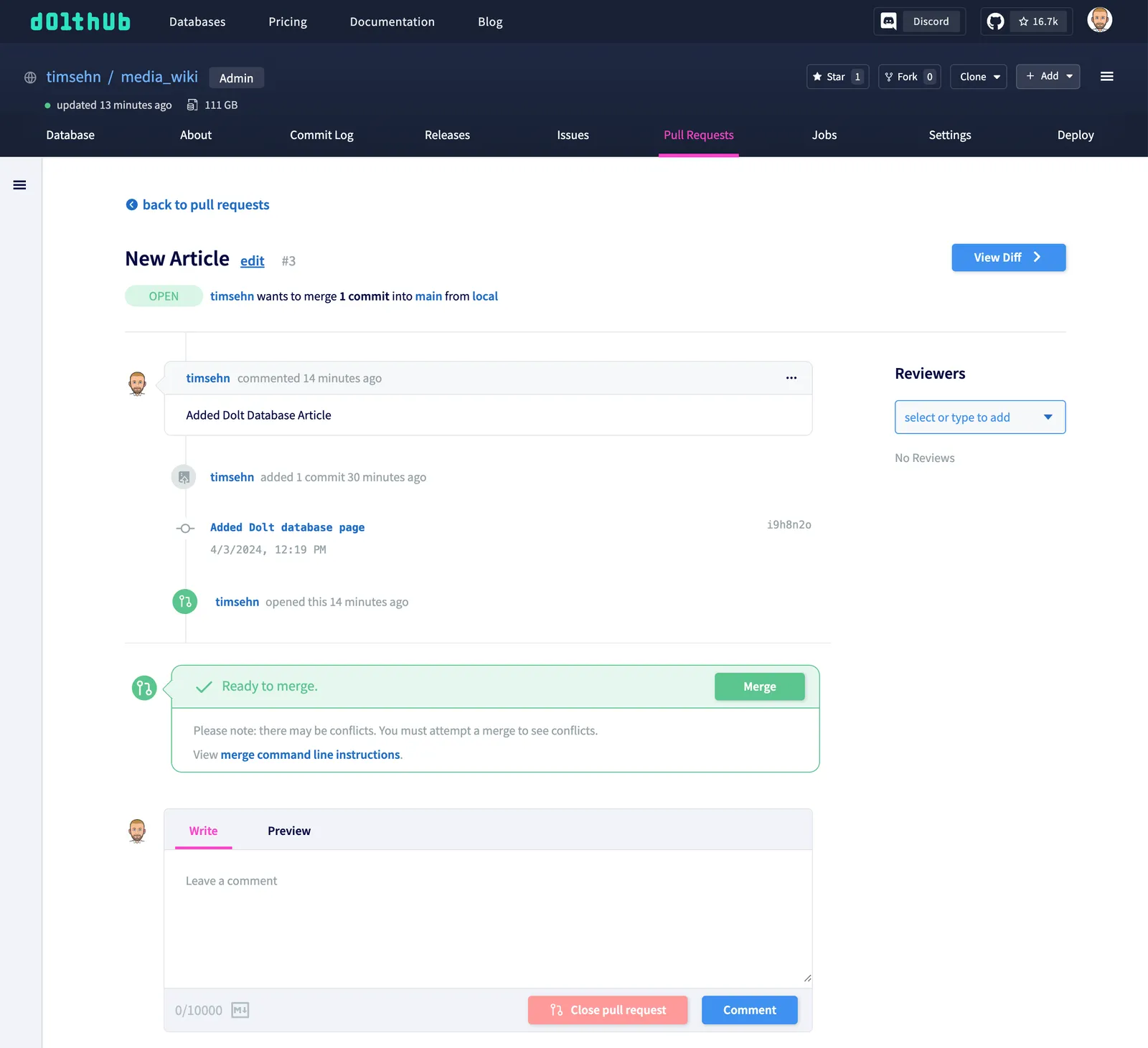

After submitting the form, I am greeted by the Pull Request page. I can send reviewers to this page to review and comment on my changes.

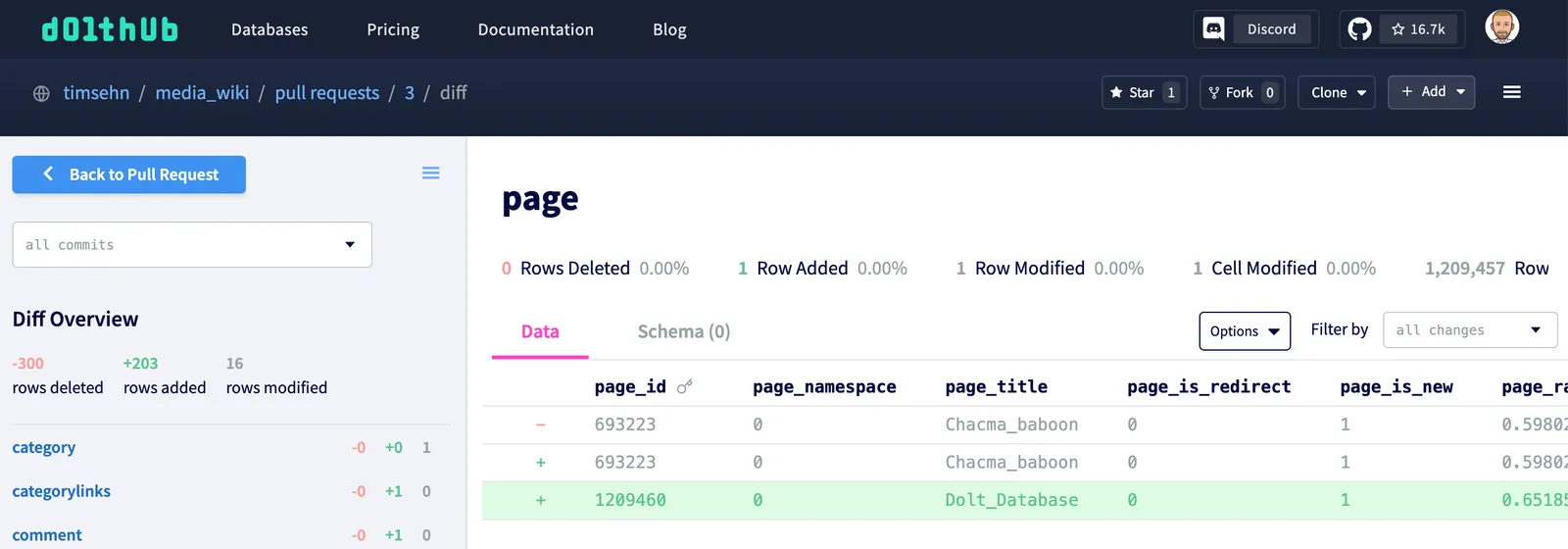

The reviewers can even review a diff.

We’re biased, but we think this decentralized collaboration workflow has a lot of promise for data like Wikipedia. Can we get a decentralized encyclopedia with many competing versions? Dolt is here to help make that a reality.

## Conclusion

So, it’s going to be a few more months before we have a full Wikipedia import in Dolt. You can follow the progress by looking at the commit log on DoltHub. If you’re interested in discussing this project, come by our Discord and let’s chat.