Introduction

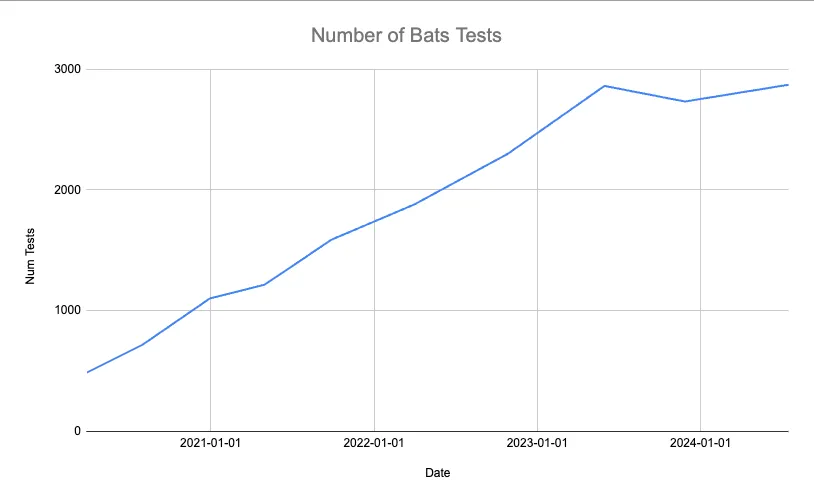

Over four years ago, we wrote about how we use Bash Automated Testing System (Bats) to test Dolt. We love bats for automated end-to-end tests of Dolt use cases that exercise the Dolt CLI. When that blog post was published, we had approximately 490 bats tests in the Dolt repository. Today, we have over 2,800, some of which are run in CI multiple times with different configurations. As Dolt’s functionality and the corresponding bats test suite grew, the runtime of the test suite became increasingly onerous for CI and local development workflows. Here is the growth of bats tests in the Dolt repository over time:

As the wall-clock time to run the bats tests got longer and longer, various developers took small excursions to improve things for themselves and their coworkers. For example, after moving to GitHub Actions, we eventually hard-coded lists of test files to run in separate test jobs, running multiple jobs per platform in parallel. We also made a number of passes at tools that would run the bats tests in parallel on a local machine. A major challenge of running the tests in parallel locally was that the tests themselves were not entirely set up to run in parallel on a single host: they were not always hygienic with regard to things like port utilization, `$HOME` directory utilization, etc. These attempts generally did improve things, and the flakiness introduced by parallelism could usually be addressed by improving the tests, but none of them was a panacea. Typically, they would bring runtimes down by an appreciable step, but eventually we would have to revisit the problem.

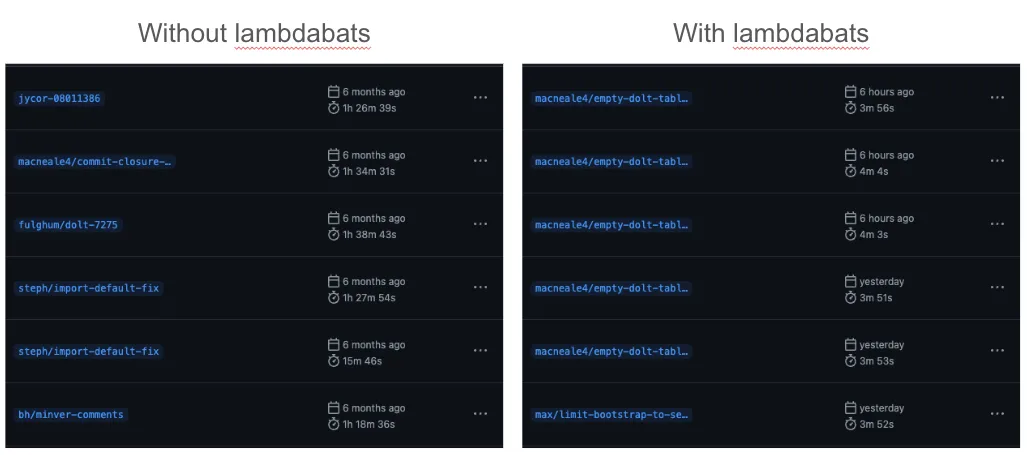

Late last year we developed a small tool that removed a lot of this pain: it runs each individual test in a bats test suite as a separate invocation of an AWS Lambda function. We call it lambdabats. Here are some runs of a CI workflow on the Dolt repository before and after lambdabats:

As you can see, runtimes went from around an hour and a half to around four minutes. For developers who already have lambdabats and the necessary toolchains installed and the Dolt repository checked out, lambdabats runs typically take between 25 and 60 seconds. Suffice it to say, developers were pretty happy when lambdabats rolled out.

lambdabats

Lambdabats is a test runner that runs a bats test suite by executing each individual test as an AWS Lambda function invocation. It takes advantage of the fact that AWS Lambda has low cold-start times and can scale to massive parallelism quickly, all while being pay-for-what-you-use. The basic idea came from a 2019 USENIX paper, *From Laptop to Lambda: Outsourcing Everyday Jobs to Thousands of Transient Functional Containers*¹, which describes a C/C++ toolchain that outsources the actual compilation and linking steps to transient Lambda containers running with massive parallelism. The amount of work done by each invocation can be quite small, while the Lambda backend takes care of all the scheduling complexity involved in keeping cold starts fast and routing requests to free resources. AWS Lambda’s execution model fits our existing bats test suite well: we have thousands of tests to run, and each test is relatively small.

On its face, lambdabats is quite simple. It comes in two parts, a server component and a client component. The server component is just an AWS Lambda function, built from a container image, that responds to HTTP request events. Each incoming HTTP request includes information about where to find the test resources in an S3 bucket and which individual bats test should be run. The server function idempotently downloads the required artifacts, invokes the requested test by shelling out to `bats -f ...`, captures the test output and success status, and returns the results in its HTTP response.
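To make the server's per-test work concrete, here is a minimal Go sketch, assuming a JSON request body. The `TestRequest` and `TestResult` types, their field names, and the `handle` function are hypothetical rather than lambdabats's actual wire format; the idempotent S3 download is elided, and the Lambda runtime wiring is left out so the sketch stays self-contained. It also assumes bats matches the `-f` filter as a regular expression against test names, which is why the name is escaped and anchored before being passed along.

```go
package main

import (
	"bytes"
	"encoding/json"
	"errors"
	"os/exec"
	"regexp"
)

// TestRequest is a hypothetical payload: where the artifacts live in S3
// and which single test to run.
type TestRequest struct {
	Bucket      string `json:"bucket"`
	BinariesKey string `json:"binaries_key"` // content-addressed archive key
	TestsKey    string `json:"tests_key"`
	TestFile    string `json:"test_file"`
	TestName    string `json:"test_name"`
}

// TestResult is what the function returns in its response.
type TestResult struct {
	Passed bool   `json:"passed"`
	Output string `json:"output"`
	Err    string `json:"error,omitempty"`
}

// batsArgs selects exactly one test. The filter is treated as a regex
// (assumption), so the name is escaped and anchored to avoid also
// matching tests whose names merely contain it.
func batsArgs(testFile, testName string) []string {
	filter := "^" + regexp.QuoteMeta(testName) + "$"
	return []string{"-f", filter, testFile}
}

// runCommand runs a command and captures combined stdout/stderr. A
// non-zero exit status is a test failure; failing to start the command
// at all is additionally reported in Err.
func runCommand(name string, args ...string) TestResult {
	var out bytes.Buffer
	cmd := exec.Command(name, args...)
	cmd.Stdout = &out
	cmd.Stderr = &out
	err := cmd.Run()
	res := TestResult{Passed: err == nil, Output: out.String()}
	var exitErr *exec.ExitError
	if err != nil && !errors.As(err, &exitErr) {
		res.Err = err.Error()
	}
	return res
}

// handle decodes one request and runs the single requested test.
func handle(body []byte) ([]byte, error) {
	var req TestRequest
	if err := json.Unmarshal(body, &req); err != nil {
		return nil, err
	}
	// Downloading and unpacking req.BinariesKey / req.TestsKey from
	// req.Bucket (skipped if already present) would happen here.
	res := runCommand("bats", batsArgs(req.TestFile, req.TestName)...)
	return json.Marshal(res)
}
```

Keeping one test per invocation means the response for a failure carries only that test's output, which makes the client's diagnostics easy to assemble.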

The client component is invoked much like bats: someone runs `lambdabats .` or `lambdabats testfile_one.bats testfile_two.bats`. When it runs, it first builds the required binaries for the AWS Lambda function's target platform. It then uploads archives of the binaries and the test files to content-addressed storage in an S3 bucket, issues parallel requests to the Lambda function that implements the server component to run all of the requested tests, collects the HTTP responses, and prints diagnostic output for any failures. The run succeeds only if all the tests pass.

Here’s what a run of the lambdabats client looks like on my laptop:

In principle, everything here is pretty straightforward, but the gap between theory and practice can always be a bit hairy. To me, an impressive aspect of the USENIX paper mentioned above is that the authors actually go to the trouble of polishing everything end to end, to the point where they can build projects like Chromium. In the case of lambdabats, we ran into the following minor hiccups:

- After adding a Cgo dependency to Dolt, we needed to make sure developers could locally cross-compile the binaries under test for the target test platform. This wasn’t too onerous: lambdabats needed to be extended to download the needed C/C++ toolchain as part of compiling the binaries under test.

- The AWS Lambda container runs in a pretty strict sandbox, and some functionality is not available there. For example, the `bash` that ships with the container runtime does not interact well with the statically allocated `/dev` mount that is given to your container, so we needed to ship a `bash` binary compiled with different flags. We also had to forgo running some tests in Lambda because they need to, for example, allocate virtual TTYs using `expect`. In cases where we couldn’t make a test work in the AWS Lambda function, we instead tagged it using bats test tags, and we made the lambdabats client optionally run those tests locally as part of a full test suite run.

- The lambdabats client has to pick a concurrency at which to run the tests, and if it picks one that is too high, it can experience throttling from the server component. Because we do not currently have many concurrent runs of lambdabats itself, a static concurrency with aggressive exponential backoff works fine for us for now.

- As with any distributed test runner, the endpoint that runs the tests ends up being an entry point for running fairly arbitrary code on some cloud infrastructure. We decided to use AWS IAM to gate who can invoke the function. This works well because running the tests also requires permission to upload to the S3 bucket where the artifacts are stored, which can also be managed by IAM. Because lambdabats itself is the only client of this Lambda function, we can forgo exposing the function over HTTP at all. We give developers access to IAM roles that grant the permissions they need, including permission to invoke the function directly. The lambdabats server function itself is deployed to a separate AWS account and runs with very minimal permissions, essentially just read access to the artifacts in the artifacts bucket.
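To issue one request per test, and to keep Lambda-incompatible tests local, the client needs each test's name and tags. Below is a deliberately simple, line-based Go sketch. It assumes bats-core's `# bats test_tags=a, b` comment syntax and single-line `@test "name" {` declarations, and it ignores file-level tags and escaped quotes in names. The `no_lambda` tag used with it is a hypothetical name, not necessarily the one Dolt uses.

```go
package main

import (
	"bufio"
	"regexp"
	"strings"
)

// BatsTest is one @test found in a .bats file, along with its tags.
type BatsTest struct {
	Name string
	Tags []string
}

var (
	// @test "description" {   (assumed to be on a single line)
	testDecl = regexp.MustCompile(`^\s*@test\s+"(.*)"\s*\{\s*$`)
	// # bats test_tags=tag1, tag2   (applies to the next @test)
	testTags = regexp.MustCompile(`^\s*#\s*bats\s+test_tags=(.*)$`)
)

// parseBats returns each test in src with the tags from any test_tags
// comments seen since the previous test.
func parseBats(src string) []BatsTest {
	var tests []BatsTest
	var pending []string
	sc := bufio.NewScanner(strings.NewReader(src))
	for sc.Scan() {
		line := sc.Text()
		if m := testTags.FindStringSubmatch(line); m != nil {
			for _, t := range strings.Split(m[1], ",") {
				if t = strings.TrimSpace(t); t != "" {
					pending = append(pending, t)
				}
			}
		} else if d := testDecl.FindStringSubmatch(line); d != nil {
			tests = append(tests, BatsTest{Name: d[1], Tags: pending})
			pending = nil
		}
	}
	return tests
}

// partition splits tests into those sent to Lambda and those that must
// run locally because they carry localTag (a hypothetical tag name).
func partition(tests []BatsTest, localTag string) (remote, local []BatsTest) {
	for _, t := range tests {
		isLocal := false
		for _, tag := range t.Tags {
			if tag == localTag {
				isLocal = true
				break
			}
		}
		if isLocal {
			local = append(local, t)
		} else {
			remote = append(remote, t)
		}
	}
	return remote, local
}
```

A real implementation could instead ask bats itself to enumerate or filter tests, which would avoid re-implementing its grammar.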

Some other things stood out to us once lambdabats was deployed and in use.

(Lack of) Platform Diversity

A potential downside of lambdabats is that AWS Lambda only supports linux-x86_64 and linux-aarch64. We also run our bats tests on macOS and Windows, and we have developers working on all three platforms. One of our major takeaways from rolling out lambdabats is that failing a test quickly is very valuable in an iteration and code review cycle, especially when running the tests on other platforms is very likely to produce the same results. In the end, given how little platform-specific functionality Dolt has, we decided that the marginal benefit of running the bats tests on every target platform synchronously with each change was not worth the cost. Currently we run them asynchronously and alert the team if anything platform-specific actually fails.

Advantages of a Dedicated Test Runner

Having a common entry point for running a test suite, under the control of the repository owners, can be very nice. It’s why some people recommend a top-level Makefile with, among other things, a `test:` target in Go projects, even though many Go projects adhere to the `go test ./...` convention.

Until lambdabats was deployed, developers and CI had just been invoking bats manually after whatever setup steps were necessary. Because there was no dedicated test runner, the version of bats itself varied between individual developers’ machines, and some aspects of the environment could also affect the tests. How to install the right binaries from the workspace under test and then invoke the bats tests appropriately was documented, but it had to be remembered and carried out by everyone on the team. Sometimes people would forget to install some of the helper binaries, and their test run would end up testing an up-to-date dolt binary against an out-of-date remotesrv binary, for example.

Now lambdabats is a single place to codify how dependencies get built, what the environment looks like when the tests are invoked, and which versions of bats and other system dependencies get used.

It also gives us a natural place to add test runner functionality as we see fit. For example, because lambdabats is so fast and is typically interacted with as a CI job, we changed its output to print all failing tests last. It’s also easy to extend the lambdabats client to run a failing test multiple times to check for flakiness.
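Rerunning a failing test to check for flakiness needs only a small classification step. In this Go sketch, `run` is a hypothetical stand-in for one lambdabats invocation of a single test, and the reruns happen sequentially; a real client could fan them out in parallel just like a normal run. `n` is assumed to be positive.

```go
package main

// Verdict summarizes repeated runs of one test.
type Verdict string

const (
	AlwaysPasses Verdict = "passes"
	AlwaysFails  Verdict = "fails"
	Flaky        Verdict = "flaky"
)

// classify runs a test n times (n > 0) and reports whether it passed
// every time, failed every time, or did both, plus the number of passes.
func classify(n int, run func() bool) (Verdict, int) {
	passes := 0
	for i := 0; i < n; i++ {
		if run() {
			passes++
		}
	}
	switch passes {
	case n:
		return AlwaysPasses, passes
	case 0:
		return AlwaysFails, passes
	default:
		return Flaky, passes
	}
}
```

Reporting the pass count alongside the verdict makes it easy to tell a test that fails once in a hundred runs from one that fails half the time.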

Cost

lambdabats is reasonably cost-effective, even though Lambda itself is far from the cheapest compute available on AWS. In June 2024, we ran 1,446,780 bats tests with lambdabats, and it cost us $106. That many tests corresponds to about 500 runs of Dolt’s entire test suite, which takes about 90 minutes per run on a GitHub Actions runner. At about $0.008 per minute, 500 runs at 90 minutes each would cost about $360 on GitHub Actions. This calculation does not take into account GitHub’s free tier for open source projects, which Dolt gets to take advantage of; it’s just meant to show that lambdabats is by no means breaking the bank compared to a readily available alternative.
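The comparison works out as follows, using only the figures quoted in this section:

$$
\begin{aligned}
\text{GitHub Actions: } & 500\ \text{runs} \times 90\ \text{min/run} \times \$0.008/\text{min} = \$360 \quad (\$0.72 \text{ per run}) \\
\text{lambdabats: } & \$106 \div 500\ \text{runs} \approx \$0.21 \text{ per run} \\
& \$106 \div 1{,}446{,}780\ \text{tests} \approx \$0.000073 \text{ per test} \\
\text{tests per run: } & 1{,}446{,}780 \div 500 \approx 2{,}894
\end{aligned}
$$

The last line is a consistency check: roughly 2,900 tests per run matches the "over 2,800" tests mentioned in the introduction.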

The Future of lambdabats

For now lambdabats is serving us well without needing much maintenance or support. There are a couple of things I would like to eventually clean up, and some improvements that seem like likely wins:

- Currently lambdabats is Dolt-specific. It hard-codes which binaries to build, how to build them, where to upload them, which IAM roles to try to assume, etc. Ideally all of that would be configurable with files in the project's own test suite, and lambdabats would be project-agnostic.

- Relatedly, lambdabats currently doesn’t do a great job of versioning the container image that the tests run in. Ideally the project configuration would choose its container version and bump it when it saw fit, which would improve the chances that previous commits of the project would still work with the lambdabats infrastructure in the future, even after the project's `main` branch adopts a new version of bats, for example.

- lambdabats is in a good position to keep statistics on the memory, CPU, and wall-clock time used by each test, and to feed those into simple infrastructure that could surface them over time. Easy access to such information could inform developers when they’re making changes or trying to optimize things in the future.

- As mentioned above, lambdabats’s concurrency and backoff behavior is not currently well tuned for frequent concurrent runs of the client against the same server endpoint. A dynamic concurrency limit with additive increase/multiplicative decrease (AIMD) would probably be a better fit if concurrent lambdabats invocations become much more frequent.

- Uploading binary and test artifacts can take an appreciable amount of time for lambdabats. lambdabats already uses content-addressed storage to avoid re-uploading artifacts that are already present, but the dolt binary itself is over 100MB and has usually changed when a user is running lambdabats. Generally, even when there are changes, only a small portion of each file has actually changed. It would be neat to see whether upload times could be improved with content-defined chunking, just as Dolt itself does; in this case, cribbing ideas from something like casync or restic.
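An AIMD limiter of the kind suggested in the list above could look roughly like this in Go. It is a sketch under assumptions: the caller calls `Acquire` before each invocation and `Release` afterward, reporting whether the invocation was throttled. The limit grows by about one slot per `limit` successes (the 1/limit-per-success increase used by TCP congestion avoidance) and halves on throttling. `minLimit` must be at least 1 so `Acquire` can always make progress.

```go
package main

import (
	"math"
	"sync"
)

// AIMDLimiter caps in-flight invocations with a limit that grows
// additively on success and shrinks multiplicatively on throttling.
type AIMDLimiter struct {
	mu                 sync.Mutex
	cond               *sync.Cond
	limit              float64
	minLimit, maxLimit float64
	inFlight           int
}

// NewAIMDLimiter assumes 1 <= minLimit <= initial <= maxLimit, so at
// least one request may always be in flight.
func NewAIMDLimiter(initial, minLimit, maxLimit float64) *AIMDLimiter {
	l := &AIMDLimiter{limit: initial, minLimit: minLimit, maxLimit: maxLimit}
	l.cond = sync.NewCond(&l.mu)
	return l
}

// Acquire blocks until fewer than floor(limit) requests are in flight.
func (l *AIMDLimiter) Acquire() {
	l.mu.Lock()
	defer l.mu.Unlock()
	for l.inFlight >= int(l.limit) {
		l.cond.Wait()
	}
	l.inFlight++
}

// Release ends a request and adjusts the limit based on its outcome.
func (l *AIMDLimiter) Release(throttled bool) {
	l.mu.Lock()
	defer l.mu.Unlock()
	l.inFlight--
	if throttled {
		l.limit = math.Max(l.minLimit, l.limit/2) // multiplicative decrease
	} else {
		l.limit = math.Min(l.maxLimit, l.limit+1/l.limit) // additive increase
	}
	l.cond.Broadcast() // wake waiters: the limit or in-flight count changed
}

// Limit reports the current whole-number concurrency limit.
func (l *AIMDLimiter) Limit() int {
	l.mu.Lock()
	defer l.mu.Unlock()
	return int(l.limit)
}
```

Compared with a static concurrency, this lets several concurrent clients settle on a shared rate without coordinating explicitly, since each backs off when the endpoint starts throttling.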

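Content-defined chunking, the idea in the last item of the list above, picks chunk boundaries from the bytes themselves, so an insertion near the start of a file moves only nearby boundaries and most chunks (and their content-addressed keys) stay the same. Here is a minimal Go sketch using a gear rolling hash in the style of FastCDC. This is a swapped-in technique for illustration (casync uses buzhash and restic uses Rabin fingerprints), and the size parameters are assumptions rather than tuned values.

```go
package main

// gearTable maps each byte value to a pseudo-random 64-bit value. It is
// filled deterministically with splitmix64 so boundaries are stable
// across runs and machines.
var gearTable = func() (t [256]uint64) {
	x := uint64(0)
	for i := range t {
		x += 0x9E3779B97F4A7C15
		z := x
		z = (z ^ (z >> 30)) * 0xBF58476D1CE4E5B9
		z = (z ^ (z >> 27)) * 0x94D049BB133111EB
		t[i] = z ^ (z >> 31)
	}
	return t
}()

// chunk splits data at content-defined boundaries. The gear hash shifts
// left one bit per byte, so it depends only on the most recent 64 bytes.
// A boundary is declared where the top avgBits bits of the hash are zero
// (about once every 2^avgBits bytes past minSize), or when a chunk
// reaches maxSize. minSize is assumed to be at least 64, so resetting the
// hash at each boundary never changes where later boundaries fall.
func chunk(data []byte, minSize, maxSize int, avgBits uint) [][]byte {
	var chunks [][]byte
	start := 0
	var h uint64
	for i, b := range data {
		h = (h << 1) + gearTable[b]
		size := i - start + 1
		if (size >= minSize && h>>(64-avgBits) == 0) || size >= maxSize {
			chunks = append(chunks, data[start:i+1])
			start = i + 1
			h = 0
		}
	}
	if start < len(data) {
		chunks = append(chunks, data[start:]) // trailing partial chunk
	}
	return chunks
}
```

Each chunk would then be stored under a hash of its contents, and only chunks not already present in the bucket would need to be uploaded.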
Conclusion

lambdabats improved our end-to-end CI times and made it cheaper and faster for our developers to run our bats test suite locally before submitting a PR. AWS Lambda ended up being a great fit as the runtime for a test runner on a project that needs no infrastructure most of the time, but that can speed things up several times a day if it has access to thousands of ephemeral containers at once. lambdabats itself is open source; it’s a bit rough around the edges and currently very Dolt-specific. If you have any questions or want to chat about it, drop us a line on Discord.

Footnotes

1. Please note that the cited USENIX paper has a lot of cool material on how a `make`-style build is transformed into cloud function invocations without any need to interact with the software project itself. Massively parallel execution on low-cold-start, pay-as-you-go FaaS infrastructure is just a very small piece of it, but it was the primary inspiration for lambdabats.