A plugin architecture using golang interface extension

We're building Dolt, the world's first version controlled SQL database. Dolt's SQL engine is go-mysql-server, which started its life as an independent open source project. Although our primary development effort on go-mysql-server is to support Dolt, it remains a generic SQL execution engine that you can use to run SQL queries on any data store by implementing a handful of golang interfaces.

In this blog post, we'll discuss a design pattern that makes it possible for integrators with go-mysql-server to implement a small subset of the interfaces and still get a lot of value out of the engine.

Lots of interfaces

To start with, why do we care how many interfaces integrators need to deal with? There are two answers to this question.

First, by making go-mysql-server easy to use and useful to as many different projects as possible, we hope to attract better bug reports and more open source contributions. This makes the engine better, which makes Dolt better.

Second, there are a lot of interfaces defined by the engine.

% grep interface core.go | wc -l

103

To be fair, some of these are used internally only, but most of them are ones we expect engine integrators to implement. To start, everyone integrating with the engine needs to define a DatabaseProvider which provides Databases, a Database which provides Tables, and a Table which provides RowIters. These interfaces look like this:

type DatabaseProvider interface {

Database(ctx *Context, name string) (Database, error)

HasDatabase(ctx *Context, name string) bool

AllDatabases(ctx *Context) []Database

}

type Database interface {

Nameable

GetTableInsensitive(ctx *Context, tblName string) (Table, bool, error)

GetTableNames(ctx *Context) ([]string, error)

}

type Table interface {

Nameable

String() string

Schema() Schema

Partitions(*Context) (PartitionIter, error)

PartitionRows(*Context, Partition) (RowIter, error)

}

Implement these 3 interfaces with 11 total methods for your data source, and you can start querying it with SQL, including a wire-compatible server. Not a bad deal at all.

So where do the other 100 interfaces come into play?

A plug-in architecture using Golang interface extension

It's easy to get started writing a backend to the engine with the above interfaces, but it will have very limited capabilities. Among the things it won't be able to do that a full-featured backend can:

- CREATE TABLE, ALTER TABLE, and other DDL statements

- INSERT, UPDATE, DELETE

- AUTO_INCREMENT columns

- Indexed lookups or joins

- FOREIGN KEY or CHECK constraints

- Triggers

- Views

- Custom functions

- Stored procedures

But don't worry! You don't have to bite off all this work in one go. All the functionality is unlocked in a modular fashion, in pretty small bites.

For example, if you want your tables to be able to accept INSERT statements, you can implement these 3 interfaces:

type InsertableTable interface {

Table

Inserter(*Context) RowInserter

}

type RowInserter interface {

TableEditor

Insert(*Context, Row) error

Closer

}

type TableEditor interface {

StatementBegin(ctx *Context)

DiscardChanges(ctx *Context, errorEncountered error) error

StatementComplete(ctx *Context) error

}

Note that this design is coherent: it's not possible to implement InsertableTable without also supplying an implementation for a RowInserter, which needs the methods from TableEditor.
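To make the shape of that work concrete, here is a minimal sketch of an in-memory table that satisfies all three interfaces. The types below (Context, Row, the one-method Table, and the inlined Close method standing in for Closer) are simplified, hypothetical stand-ins rather than the engine's real definitions, and the order in which the engine calls the editor methods is assumed for illustration.

```go
package main

import "fmt"

// Simplified, hypothetical stand-ins for the engine's types.
type Context struct{}
type Row []interface{}

type Table interface{ Name() string }

type TableEditor interface {
	StatementBegin(ctx *Context)
	DiscardChanges(ctx *Context, errorEncountered error) error
	StatementComplete(ctx *Context) error
}

type RowInserter interface {
	TableEditor
	Insert(*Context, Row) error
	Close(*Context) error // stand-in for the Closer interface
}

type InsertableTable interface {
	Table
	Inserter(*Context) RowInserter
}

// memTable keeps its committed rows in a slice.
type memTable struct {
	name string
	rows []Row
}

func (t *memTable) Name() string                  { return t.name }
func (t *memTable) Inserter(*Context) RowInserter { return &memInserter{table: t} }

// memInserter buffers the rows of one statement and applies them on completion.
type memInserter struct {
	table   *memTable
	pending []Row
}

func (i *memInserter) StatementBegin(*Context) { i.pending = nil }

func (i *memInserter) Insert(_ *Context, r Row) error {
	i.pending = append(i.pending, r)
	return nil
}

// DiscardChanges drops the buffered rows without touching the table.
func (i *memInserter) DiscardChanges(*Context, error) error {
	i.pending = nil
	return nil
}

// StatementComplete moves the buffered rows into the table.
func (i *memInserter) StatementComplete(*Context) error {
	i.table.rows = append(i.table.rows, i.pending...)
	i.pending = nil
	return nil
}

func (i *memInserter) Close(*Context) error { return nil }

// Compile-time check that memTable satisfies the extension interface.
var _ InsertableTable = (*memTable)(nil)

func main() {
	ctx := &Context{}
	t := &memTable{name: "people"}
	ins := t.Inserter(ctx)
	ins.StatementBegin(ctx)
	_ = ins.Insert(ctx, Row{1, "alice"})
	_ = ins.StatementComplete(ctx)
	_ = ins.Close(ctx)
	fmt.Println("committed rows:", len(t.rows))
}
```

The compile-time assertion near the bottom is a handy habit for integrators: if the table stops satisfying the extension interface, the build fails instead of the engine silently treating the table as read-only.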

Similarly, if you want to add index lookup capabilities to your tables, you implement these interfaces:

type IndexedTable interface {

IndexAddressableTable

GetIndexes(ctx *Context) ([]Index, error)

}

type IndexAddressableTable interface {

Table

IndexAddressable

}

type IndexAddressable interface {

WithIndexLookup(IndexLookup) Table

}

Note that here you have two choices: you can declare a table that supports native indexes with IndexedTable, or you can defer to some external index provider and only implement IndexAddressableTable.

That's the pattern. If you want to unlock more functionality in the engine, simply implement additional interfaces. Each one is a small, manageable chunk of work so you can develop your backend iteratively.

Interface extension in Golang

How does this all work on the engine side?

Like many strongly typed languages, Go allows you to assert that an object has a different runtime interface than its static declaration. Go actually provides two (or three) different methods to do this. For this example, we'll be checking if a sql.Table supports sql.InsertableTable.

You can make a naked type assertion, like this:

func getInsertableTable(t sql.Table) (sql.InsertableTable, error) {

it := t.(sql.InsertableTable)

return it, nil

}

This will panic if the assertion fails, which makes it unsuitable for this pattern. We want to allow graceful recovery in the case that the integrator hasn't implemented the extension. So instead, we can use the second form of type assertion:

func getInsertableTable(t sql.Table) (sql.InsertableTable, error) {

it, ok := t.(sql.InsertableTable)

if !ok {

return nil, ErrInsertIntoNotSupported.New()

}

return it, nil

}

Finally, for our use case there are actually many sub-interfaces possible that we need to handle, so we often write this logic as a type switch like so:

func getInsertableTable(t sql.Table) (sql.InsertableTable, error) {

switch t := t.(type) {

case sql.InsertableTable:

return t, nil

case sql.TableWrapper:

return getInsertableTable(t.Underlying())

default:

return nil, ErrInsertIntoNotSupported.New()

}

}

As you can see above, when you try to run an INSERT query on a table implementation that doesn't support it, your query will fail with the message "table doesn't support INSERT INTO".
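To see the type switch behave end to end, here is a small self-contained sketch that can be run outside the engine. Everything in it is a hypothetical stand-in: the Table, InsertableTable, and TableWrapper interfaces are cut down to one or two methods each, and readOnlyTable, memTable, and loggingTable are invented for illustration.

```go
package main

import (
	"errors"
	"fmt"
)

// Simplified, hypothetical stand-ins for the engine's types.
type Row []interface{}

type Table interface {
	Name() string
}

type InsertableTable interface {
	Table
	Insert(r Row) error
}

// TableWrapper is implemented by tables that decorate another table.
type TableWrapper interface {
	Underlying() Table
}

var ErrInsertNotSupported = errors.New("table doesn't support INSERT INTO")

// readOnlyTable implements only the base interface.
type readOnlyTable struct{ name string }

func (t *readOnlyTable) Name() string { return t.name }

// memTable additionally implements InsertableTable.
type memTable struct {
	name string
	rows []Row
}

func (t *memTable) Name() string { return t.name }

func (t *memTable) Insert(r Row) error {
	t.rows = append(t.rows, r)
	return nil
}

// loggingTable wraps another table and has no insert support of its own.
type loggingTable struct{ inner Table }

func (t *loggingTable) Name() string      { return t.inner.Name() }
func (t *loggingTable) Underlying() Table { return t.inner }

// getInsertableTable unwraps wrappers until it finds insert support or gives up.
func getInsertableTable(t Table) (InsertableTable, error) {
	switch t := t.(type) {
	case InsertableTable:
		return t, nil
	case TableWrapper:
		return getInsertableTable(t.Underlying())
	default:
		return nil, ErrInsertNotSupported
	}
}

func main() {
	tables := []Table{
		&readOnlyTable{name: "ro"},
		&memTable{name: "mem"},
		&loggingTable{inner: &memTable{name: "wrapped"}},
	}
	for _, t := range tables {
		if _, err := getInsertableTable(t); err != nil {
			fmt.Printf("%s: %v\n", t.Name(), err)
		} else {
			fmt.Printf("%s: insert supported\n", t.Name())
		}
	}
}
```

Case order matters in a switch like this: a wrapper that also implements the insertable interface itself would match the first case and never be unwrapped, which is usually what you want.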

We use this pattern extensively throughout the SQL engine, which lets integrators provide as much functionality as they need to and no more.

Advantages of interface extension in a plug-in architecture

Astute readers are probably asking: why not just combine all the interfaces together and then let integrators throw errors for the unimplemented methods? Something like this:

type Table interface {

Nameable

String() string

Schema() Schema

Partitions(*Context) (PartitionIter, error)

PartitionRows(*Context, Partition) (RowIter, error)

// InsertableTable methods

Inserter(*Context) RowInserter // just return nil if you don't support this

// DeletableTable, UpdateableTable, etc. methods below...

}

The most concrete reason not to do things this way is that as you develop the framework over time, you'll constantly break any existing integrators, since they will no longer satisfy an interface when you add methods to it. It would also require us to provide some sort of "not implemented" semantics on all these methods (like a return parameter or a special error type), rather than letting the language's type system do this for us. As an open source project, we can't control who takes a dependency on us or enforce that they keep it up to date as we change it. We have made breaking changes in the past, but we try to do so very sparingly, definitely not every time we add a new feature to the engine. For that use case, we almost always define a new interface.
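The compatibility argument can be sketched in a few lines. In the example below, legacyTable stands for an integrator's type written before a new capability existed. Because the capability arrives as a separate interface, the old type still compiles and simply reports that it lacks the feature. All names here, including TruncateableTable and its Truncate signature, are hypothetical and chosen only for illustration.

```go
package main

import "fmt"

// The base interface integrators originally implemented (simplified stand-in).
type Table interface {
	Name() string
}

// A capability added in a later release as a separate, hypothetical interface.
// Had Truncate been added to Table itself, legacyTable below would no longer compile.
type TruncateableTable interface {
	Table
	Truncate() (int, error)
}

// legacyTable was written against the earlier release and knows nothing about Truncate.
type legacyTable struct{}

func (legacyTable) Name() string { return "legacy" }

// modernTable opts in to the new capability.
type modernTable struct{ rows int }

func (t *modernTable) Name() string { return "modern" }

// Truncate removes all rows and reports how many were removed.
func (t *modernTable) Truncate() (int, error) {
	n := t.rows
	t.rows = 0
	return n, nil
}

// Compile-time checks: both types still satisfy the interfaces they claim.
var _ Table = legacyTable{}
var _ TruncateableTable = (*modernTable)(nil)

// truncate uses the capability when present and fails gracefully otherwise.
func truncate(t Table) (int, error) {
	tt, ok := t.(TruncateableTable)
	if !ok {
		return 0, fmt.Errorf("table %s doesn't support TRUNCATE", t.Name())
	}
	return tt.Truncate()
}

func main() {
	for _, t := range []Table{legacyTable{}, &modernTable{rows: 3}} {
		n, err := truncate(t)
		if err != nil {
			fmt.Println(err)
			continue
		}
		fmt.Printf("%s: removed %d rows\n", t.Name(), n)
	}
}
```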

The other arguments for interface extension mostly boil down to aesthetics: it's gross to have to provide error implementations for so many methods. This isn't a major consideration for us (all of our code is gross), but people do care about this. A lot. It bothered Java developers enough that they got Oracle to introduce default method implementations for interfaces.

Interface extension in other languages

Golang isn't the only language where this pattern is possible -- far from it. Here's how it looks in Java:

public interface Table {

public Schema schema();

...

}

public interface InsertableTable extends Table {

public RowInserter inserter(Context ctx);

}

...

try {

InsertableTable it = (InsertableTable) table;

} catch (ClassCastException e) {

throw new UnsupportedException("InsertableTable");

}

In addition to statically typed languages like Java and Go, this design pattern is even easier in dynamically typed languages like JavaScript and Python -- you just don't get any help from the compiler to enforce correctness.

Performance implications

Interface assertions in golang are relatively cheap but certainly not

free. We were experimenting with a variation on this pattern when

testing out a new row storage format to see how it would impact

performance, and was surprised to see runtime.assertI2I was taking

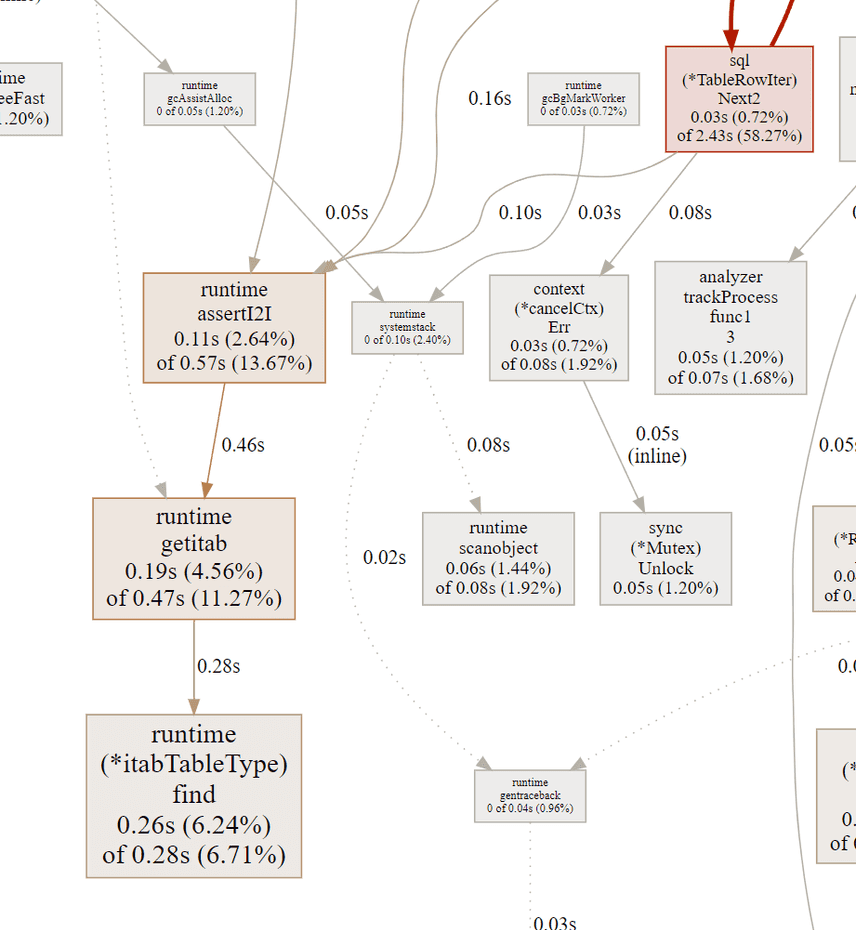

up a full 13% of our CPU time:

What's going on here? Basically, we were doing something like this:

type RowIter interface {

Next(ctx *Context) (Row, error)

Closer

}

type RowIter2 interface {

RowIter

Next2(ctx *Context, frame *RowFrame) error

}

...

func (i *TableRowIter) Next2(ctx *sql.Context, frame *sql.RowFrame) error {

i2 := i.childIter.(sql.RowIter2)

return i2.Next2(ctx, frame)

}

It's not easy to tell without understanding the structure of the overall row iteration code, but the call to assert sql.RowIter2 is effectively taking place inside a tight loop. For every row fetched, we were performing this interface conversion, which incredibly is more expensive than going to disk and deserializing a row (at least once the OS caches the page in memory).

The reason this call is expensive gets into the internals of the golang runtime. If you're interested, Planetscale did a great write-up of how the same performance penalty can apply to golang generics.

The bottom line: if you use this pattern, don't do it in a tight loop where performance is critical.
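One way to follow that advice is to perform the assertion once, when the iterator is constructed, and store the result in a field of the extended interface type. The sketch below shows the idea with simplified, hypothetical stand-ins (no Context, a toy RowFrame, and invented sliceIter and legacyIter types). It illustrates the general technique and is not necessarily the fix the engine adopted.

```go
package main

import (
	"errors"
	"fmt"
	"io"
)

// Simplified, hypothetical stand-ins for the engine's types.
type Row []interface{}
type RowFrame struct{ vals []interface{} }

type RowIter interface {
	Next() (Row, error)
}

type RowIter2 interface {
	RowIter
	Next2(frame *RowFrame) error
}

// sliceIter supports both iteration styles and returns io.EOF when exhausted.
type sliceIter struct {
	rows []Row
	pos  int
}

func (s *sliceIter) Next() (Row, error) {
	if s.pos >= len(s.rows) {
		return nil, io.EOF
	}
	r := s.rows[s.pos]
	s.pos++
	return r, nil
}

func (s *sliceIter) Next2(frame *RowFrame) error {
	r, err := s.Next()
	if err != nil {
		return err
	}
	frame.vals = append(frame.vals[:0], r...)
	return nil
}

// legacyIter implements only the original interface.
type legacyIter struct{}

func (legacyIter) Next() (Row, error) { return nil, io.EOF }

// TableRowIter stores its child as a RowIter2, so no assertion happens per row.
type TableRowIter struct {
	child RowIter2
}

// NewTableRowIter performs the interface assertion exactly once.
func NewTableRowIter(child RowIter) (*TableRowIter, error) {
	c2, ok := child.(RowIter2)
	if !ok {
		return nil, errors.New("child iterator doesn't support RowIter2")
	}
	return &TableRowIter{child: c2}, nil
}

// Next2 is the hot path: an ordinary interface method call, no type assertion.
func (i *TableRowIter) Next2(frame *RowFrame) error {
	return i.child.Next2(frame)
}

func main() {
	it, err := NewTableRowIter(&sliceIter{rows: []Row{{1, "a"}, {2, "b"}}})
	if err != nil {
		fmt.Println(err)
		return
	}
	frame := &RowFrame{}
	count := 0
	for {
		err := it.Next2(frame)
		if err == io.EOF {
			break
		}
		if err != nil {
			fmt.Println(err)
			return
		}
		count++
	}
	fmt.Println("rows read:", count)

	// A child without Next2 is rejected up front rather than panicking mid-scan.
	if _, err := NewTableRowIter(legacyIter{}); err != nil {
		fmt.Println(err)
	}
}
```

A side benefit of asserting in the constructor is that an unsupported child surfaces as an ordinary error before any rows are read, instead of as a panic partway through a scan.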

Conclusion

The interface extension design pattern is well suited for plugin architectures like the one used by go-mysql-server. It's a great way to make it easier for people to integrate with your project, and is flexible and easy to implement.

Questions? Comments? Thoughts about SQL engines or databases? Come talk to us on Discord.