Transforming postgres dumps to MySQL dumps

Introduction

We are building Dolt, the only SQL

database that you can branch and merge, fork and clone, push and pull

like a Git repository. For Dolt's SQL interface, we chose to implement

the MySQL dialect and wire protocol, simply because MySQL is the most

popular free SQL database by a large margin. Customers currently

running MySQL can use Dolt as a drop-in replacement without changing

any application code, and migrating data from MySQL to Dolt is as easy

as running a mysqldump followed by a dolt sql < dump.sql.

But not everyone uses MySQL. As you might expect, a very frequent question we get is: my company uses Postgres, how can we use Dolt? We're in the early phases of a Postgres compatible version of Dolt to make migration painless for Postgres shops. But that won't see daylight for a while yet.

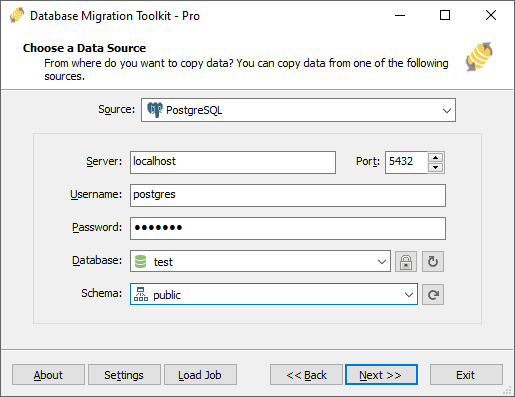

For now, the way to use Postgres with Dolt involves migrating your Postgres Data into a MySQL format so that Dolt can ingest it. So how do you get Postgres data into MySQL?

Today we're excited to announce what we think is the best free and open source script to convert a Postgres dump into a MySQL dump: pg2mysql. Go check it out on GitHub, or keep reading to hear how it works.

Postgres into MySQL: state of the art

To start, let's answer the obvious question: why did we write this script?

Let's look around at the state of the art for getting Postgres data into MySQL. There are several commercial options for between $50 and $300 that have decent reviews but which I have not evaluated personally. Charging money is a non-starter for our use case: we don't want any barrier for customers who are experimenting with Dolt.

On the free side of things, there are libraries like SQLAlchemy that claim to be able to convert any supported database format into any other. Our python library, DoltPy, uses SQLAlchemy under the hood. It works decently well at this task, well enough that one customer recently was able to migrate his Postgres database to Dolt using it. But it wasn't easy: it required writing custom code and quite a bit of massaging of the schema and data, as well as a couple weeks of back-and-forth support on our Discord server.

What we really wanted is a free, no-touch script that takes a

pg_dump file and converts it to the MySQL equivalent. Sadly, such a

thing didn't appear to exist. The closest we could find was

pg2mysql, basically a

command-line port of another web-based

solution. It's surely better

than nothing, and works as a first pass for conversion. But it didn't

fully work on the data I tried converting with it, generating schemas

that MySQL couldn't parse (because of incorrect or nonsensical types),

values that couldn't be inserted, ALTER TABLE statements that

wouldn't parse, etc. It was also missing a lot of really important

features, like support for foreign keys and other constraints.

In short, it wasn't good enough to hand to customers. So I set out to write one that was.

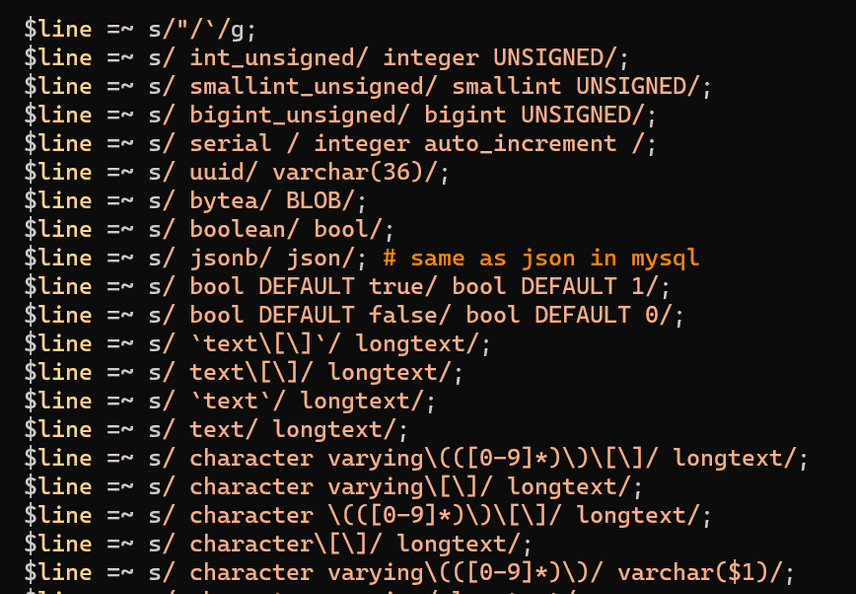

Perl regex: there's nothing it can't do badly

As I have several times previously, I decided to solve this messy problem with perl. Why perl? Because no other language approaches perl's expressiveness when it comes to processing and manipulating lots of raw text, which is what I needed to do. That's what most of the script is: just lots and lots of lines translating types to their MySQL equivalents.

Those are pretty rote, and I assembled them through a combination of reading the original pg2mysql source, Postgres documentation, and trial and error. They're ultimately boring.

There are a couple interesting bits in the script, though. The first

one is handling long, multi-line strings in INSERT statements, which

is an important use case for Dolt. This has to work correctly for

things like embedded single quotes. Oddly enough, I found that

pg_dump appears to have a bug regarding escaped quotes. It will

translate a string that includes an escaped single quote character

like so:

An escaped single quote: \'

Becomes

An escaped single quote: \''This is just incorrect: the backslash was already escaping the single

quote, so escaping it with '' effectively un-escapes it, ending the

string literal early and causing parse errors. That's easy to fix with

a simple regex substitution:

$line =~ s/\\''/\\\\''/g;A slightly tricker problem is keeping track of when an INSERT

statement ends. We need to do this because we modify all INSERT

statements to enclose field names in backticks, without which some

will not parse correctly. To do this, we just need to keep track of

how many double quotes are on a line and whether we're in the middle

of an insert currently or not. Perl makes both these tasks easy:

# Count single quotes

my $quotes = () = $line =~ m/'/g;

my $statement_continues = 1;

if ( $line =~ m/\);$/ ) {

# the above is a reasonable heuristic for a line not

# continuing but isn't fool proof, and can fail on long text

# lines that end in );. To do slightly better, we also keep

# track of how many single quotes we've seen

if ( (!$in_insert && $quotes % 2 == 0)

|| ($in_insert && $quotes % 2 == 1) ) {

$statement_continues = 0;

}

}Handling sequences

Postgres has database-managed sequences that are commonly used to

generate keys for tables. These are first class schema entities that

have no direct equivalent in MySQL, so can't be supported

directly. But the common use case, where a single column should

automatically increment on every insert, does have an equivalent in

the AUTO_INCREMENT column property. Our script does its best to

perform this conversion automatically.

# For tables with a sequence for an ID, pg_dump does the following:

# 1) create the table with no keys

# 2) create a sequence for that table

# 3) alter table to set the default to that sequence nextval

# 4) inserts

# 5) alter table to add primary key

#

# This doesn't work in mysql. 2) and 3) are not supported at all,

# and a column can't be set to auto_increment unless it's a key in

# the table. So instead, when we see this pattern, we defer

# auto_increment changes until after the primary key changes. This

# also makes assumptions about the type of a primary key column

# which may not be accurate.

#

# ALTER TABLE public.account_emailaddress ALTER COLUMN id SET DEFAULT nextval

if ( $line =~ m/\s*ALTER TABLE (\S+) ALTER COLUMN (\S+) SET DEFAULT nextval/i ) {

push @deferred_ai_statements, "ALTER TABLE $1 MODIFY `$2` integer auto_increment;";

$line = "";

}

}On a side note: Dolt didn't support altering a column to add the

AUTO_INCREMENT property, so I had to undertake a kind of lengthy

detour to add

it.

Escape hatch: INSERT IGNORE and skipping whole tables

Some Postgres dumps contain values that MySQL just can't accept, for

example timestamp values of 0001-01-01. (MySQL can't represent

dates or times before the year 1000). For these cases, it's useful to

be able to be more lenient, silently truncating or applying defaults

where necessary, which is what the INSERT IGNORE syntax in MySQL

does. You can enable this behavior with the --insert_ignore flag.

For some tables, even that might not be enough, usually because they

use some data type not supported in MySQL, such as tsvector. When

nothing else is working and you just want a proof of concept, you can

skip those tables entirely with the --skip flag.

We need your help

We've tested this script on several pg_dump files, but we need more eyeballs and users to find the things we've missed or gotten wrong. The only way a project like this succeeds is by getting tested by lots and lots of examples that we can't make ourselves. We're confident this is the best free and open source tool of its kind available, but that isn't really saying much. There's surely lots of bugs, so help us find them and let us know about them by filing issues.

Conclusion

We get asked for Postgres support several times a month. It's coming, but will be a while. In the meantime, if you're interested in trying out Dolt, this script could help bridge the gap. Give it a shot and let us know how it goes. Or if you're not a Dolt customer, and are one of the relatively few people migrating off of Postgres onto MySQL, we hope this script makes your life a little easier.

Questions? Comments? Join our engineering team on Discord and start a conversation.